DATA TRANSFORMATION

In data science, transformation means changing data from its original form into a new form that is more suitable for analysis, modeling, or interpretation.🔹 Step 1: Import libraries¶

In [2]:

import pandas as pd

import numpy as np

🔹 Step 2: Load the dataset¶

In [19]:

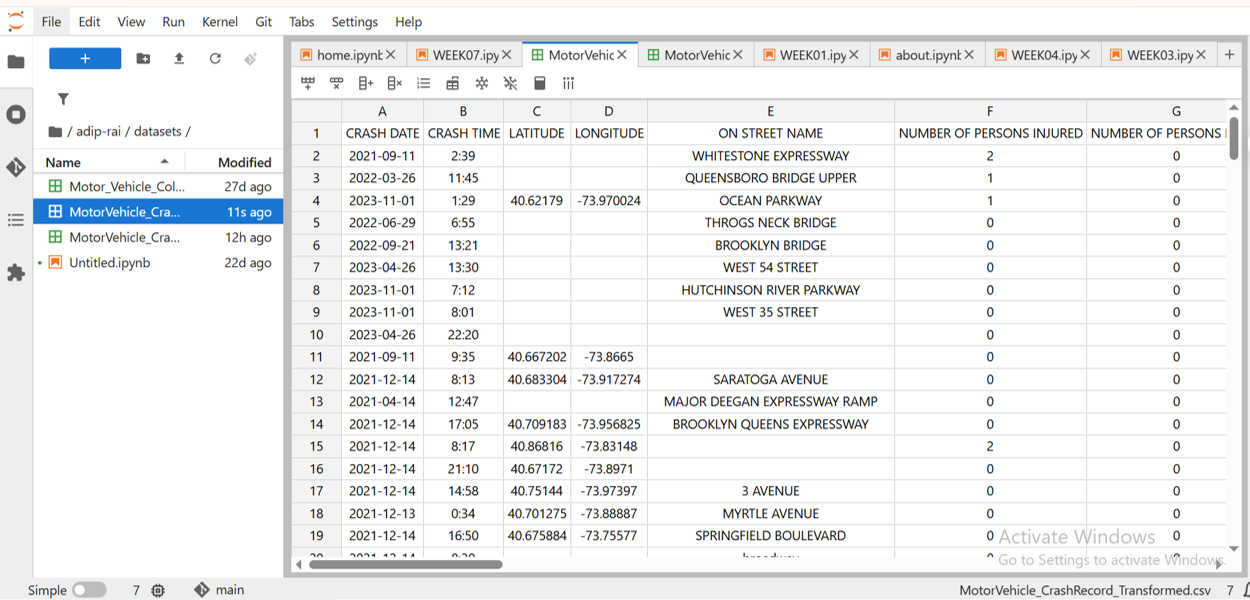

df = pd.read_csv("datasets/MotorVehicle_CrashRecord.csv")

df.columns = df.columns.str.strip()

print(df.columns)

# View basic info

df.head(201)

Index(['CRASH DATE', 'CRASH TIME', 'BOROUGH', 'LATITUDE', 'LONGITUDE',

'ON STREET NAME', 'NUMBER OF PERSONS INJURED',

'NUMBER OF PERSONS KILLED', 'NUMBER OF PEDESTRIANS INJURED',

'NUMBER OF PEDESTRIANS KILLED', 'NUMBER OF CYCLIST INJURED',

'NUMBER OF CYCLIST KILLED', 'NUMBER OF MOTORIST INJURED',

'NUMBER OF MOTORIST KILLED', 'CONTRIBUTING FACTOR VEHICLE',

'VEHICLE TYPE CODE 1', 'VEHICLE TYPE CODE 2', 'VEHICLE TYPE CODE 3',

'VEHICLE TYPE CODE 4', 'VEHICLE TYPE CODE 5'],

dtype='object')

Out[19]:

| CRASH DATE | CRASH TIME | BOROUGH | LATITUDE | LONGITUDE | ON STREET NAME | NUMBER OF PERSONS INJURED | NUMBER OF PERSONS KILLED | NUMBER OF PEDESTRIANS INJURED | NUMBER OF PEDESTRIANS KILLED | NUMBER OF CYCLIST INJURED | NUMBER OF CYCLIST KILLED | NUMBER OF MOTORIST INJURED | NUMBER OF MOTORIST KILLED | CONTRIBUTING FACTOR VEHICLE | VEHICLE TYPE CODE 1 | VEHICLE TYPE CODE 2 | VEHICLE TYPE CODE 3 | VEHICLE TYPE CODE 4 | VEHICLE TYPE CODE 5 | |

|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|

| 0 | 9/11/2021 | 2:39 | NaN | NaN | NaN | WHITESTONE EXPRESSWAY | 2 | 0 | 0 | 0 | 0 | 0 | 2 | 0 | Aggressive Driving/Road Rage | Sedan | Sedan | NaN | NaN | NaN |

| 1 | 3/26/2022 | 11:45 | NaN | NaN | NaN | QUEENSBORO BRIDGE UPPER | 1 | 0 | 0 | 0 | 0 | 0 | 1 | 0 | Pavement Slippery | Sedan | NaN | NaN | NaN | NaN |

| 2 | 11/1/2023 | 1:29 | BROOKLYN | 40.621790 | -73.970024 | OCEAN PARKWAY | 1 | 0 | 0 | 0 | 0 | 0 | 1 | 0 | Unspecified | Moped | Sedan | Sedan | NaN | NaN |

| 3 | 6/29/2022 | 6:55 | NaN | NaN | NaN | THROGS NECK BRIDGE | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | Following Too Closely | Sedan | Pick-up Truck | NaN | NaN | NaN |

| 4 | 9/21/2022 | 13:21 | NaN | NaN | NaN | BROOKLYN BRIDGE | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | Passing Too Closely | Station Wagon/Sport Utility Vehicle | NaN | NaN | NaN | NaN |

| ... | ... | ... | ... | ... | ... | ... | ... | ... | ... | ... | ... | ... | ... | ... | ... | ... | ... | ... | ... | ... |

| 195 | 3/23/2022 | 21:00 | QUEENS | 40.776764 | -73.848015 | NaN | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | Unspecified | Sedan | NaN | NaN | NaN | NaN |

| 196 | 3/26/2022 | 12:00 | QUEENS | 40.747498 | -73.913090 | NaN | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | Unspecified | Sedan | NaN | NaN | NaN | NaN |

| 197 | 3/26/2022 | 13:28 | BROOKLYN | 40.701637 | -73.942276 | GRAHAM AVENUE | 1 | 0 | 0 | 0 | 0 | 0 | 1 | 0 | Following Too Closely | Station Wagon/Sport Utility Vehicle | Station Wagon/Sport Utility Vehicle | NaN | NaN | NaN |

| 198 | 3/26/2022 | 19:12 | QUEENS | 40.693490 | -73.826546 | NaN | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | Unsafe Speed | Station Wagon/Sport Utility Vehicle | Sedan | NaN | NaN | NaN |

| 199 | 3/26/2022 | 21:29 | QUEENS | 40.726463 | -73.859430 | WETHEROLE STREET | 1 | 0 | 1 | 0 | 0 | 0 | 0 | 0 | Unsafe Speed | Bike | NaN | NaN | NaN | NaN |

200 rows × 20 columns

🔹 Step 3: Inspect data types & missing values¶

In [20]:

df.info()

df.isna().sum()

<class 'pandas.core.frame.DataFrame'> RangeIndex: 200 entries, 0 to 199 Data columns (total 20 columns): # Column Non-Null Count Dtype --- ------ -------------- ----- 0 CRASH DATE 200 non-null object 1 CRASH TIME 200 non-null object 2 BOROUGH 124 non-null object 3 LATITUDE 169 non-null float64 4 LONGITUDE 169 non-null float64 5 ON STREET NAME 151 non-null object 6 NUMBER OF PERSONS INJURED 200 non-null int64 7 NUMBER OF PERSONS KILLED 200 non-null int64 8 NUMBER OF PEDESTRIANS INJURED 200 non-null int64 9 NUMBER OF PEDESTRIANS KILLED 200 non-null int64 10 NUMBER OF CYCLIST INJURED 200 non-null int64 11 NUMBER OF CYCLIST KILLED 200 non-null int64 12 NUMBER OF MOTORIST INJURED 200 non-null int64 13 NUMBER OF MOTORIST KILLED 200 non-null int64 14 CONTRIBUTING FACTOR VEHICLE 198 non-null object 15 VEHICLE TYPE CODE 1 196 non-null object 16 VEHICLE TYPE CODE 2 123 non-null object 17 VEHICLE TYPE CODE 3 11 non-null object 18 VEHICLE TYPE CODE 4 3 non-null object 19 VEHICLE TYPE CODE 5 0 non-null float64 dtypes: float64(3), int64(8), object(9) memory usage: 31.4+ KB

Out[20]:

CRASH DATE 0 CRASH TIME 0 BOROUGH 76 LATITUDE 31 LONGITUDE 31 ON STREET NAME 49 NUMBER OF PERSONS INJURED 0 NUMBER OF PERSONS KILLED 0 NUMBER OF PEDESTRIANS INJURED 0 NUMBER OF PEDESTRIANS KILLED 0 NUMBER OF CYCLIST INJURED 0 NUMBER OF CYCLIST KILLED 0 NUMBER OF MOTORIST INJURED 0 NUMBER OF MOTORIST KILLED 0 CONTRIBUTING FACTOR VEHICLE 2 VEHICLE TYPE CODE 1 4 VEHICLE TYPE CODE 2 77 VEHICLE TYPE CODE 3 189 VEHICLE TYPE CODE 4 197 VEHICLE TYPE CODE 5 200 dtype: int64

🔹 Step 4: DATA TRANSFORMATION STEPS¶

4.1 Convert Date column (if available)¶

In [26]:

df['CRASH DATE'] = pd.to_datetime(df['CRASH DATE'], errors='coerce')

# Extract useful features

df['Year'] = df['CRASH DATE'].dt.year

df['Month'] = df['CRASH DATE'].dt.month

df['Day'] = df['CRASH DATE'].dt.day

df['Hour'] = df['CRASH DATE'].dt.hour

4.2 Transform Hour column (important for density analysis)¶

In [27]:

df['Hour'] = pd.to_numeric(df['Hour'], errors='coerce')

df = df.dropna(subset=['Hour'])

4.3 Create Time-of-Day feature (Feature Engineering)¶

In [28]:

def time_of_day(hour):

if 0 <= hour < 6:

return 'Late Night'

elif 6 <= hour < 12:

return 'Morning'

elif 12 <= hour < 18:

return 'Afternoon'

else:

return 'Evening'

df['Time_of_Day'] = df['Hour'].apply(time_of_day)

4.4 Encode categorical variables (Borough / Location)¶

In [38]:

df = pd.get_dummies(df, columns=['Borough'], drop_first=True)

--------------------------------------------------------------------------- KeyError Traceback (most recent call last) Cell In[38], line 1 ----> 1 df = pd.get_dummies(df, columns=['Borough'], drop_first=True) File /opt/conda/lib/python3.13/site-packages/pandas/core/reshape/encoding.py:170, in get_dummies(data, prefix, prefix_sep, dummy_na, columns, sparse, drop_first, dtype) 168 raise TypeError("Input must be a list-like for parameter `columns`") 169 else: --> 170 data_to_encode = data[columns] 172 # validate prefixes and separator to avoid silently dropping cols 173 def check_len(item, name: str): File /opt/conda/lib/python3.13/site-packages/pandas/core/frame.py:4119, in DataFrame.__getitem__(self, key) 4117 if is_iterator(key): 4118 key = list(key) -> 4119 indexer = self.columns._get_indexer_strict(key, "columns")[1] 4121 # take() does not accept boolean indexers 4122 if getattr(indexer, "dtype", None) == bool: File /opt/conda/lib/python3.13/site-packages/pandas/core/indexes/base.py:6212, in Index._get_indexer_strict(self, key, axis_name) 6209 else: 6210 keyarr, indexer, new_indexer = self._reindex_non_unique(keyarr) -> 6212 self._raise_if_missing(keyarr, indexer, axis_name) 6214 keyarr = self.take(indexer) 6215 if isinstance(key, Index): 6216 # GH 42790 - Preserve name from an Index File /opt/conda/lib/python3.13/site-packages/pandas/core/indexes/base.py:6261, in Index._raise_if_missing(self, key, indexer, axis_name) 6259 if nmissing: 6260 if nmissing == len(indexer): -> 6261 raise KeyError(f"None of [{key}] are in the [{axis_name}]") 6263 not_found = list(ensure_index(key)[missing_mask.nonzero()[0]].unique()) 6264 raise KeyError(f"{not_found} not in index") KeyError: "None of [Index(['Borough'], dtype='object')] are in the [columns]"

4.5 Normalize numerical columns (Scaling)¶

In [31]:

from sklearn.preprocessing import MinMaxScaler

scaler = MinMaxScaler()

df[['Hour']] = scaler.fit_transform(df[['Hour']])

4.6 Log transformation (optional but powerful)¶

In [32]:

df['Log_Hour'] = np.log1p(df['Hour'])

🔹 Step 5: Save transformed dataset¶

In [34]:

df.to_csv("datasets/MotorVehicle_CrashRecord_Transformed.csv", index=False)

In [40]:

df.head(201)

Out[40]:

| CRASH DATE | CRASH TIME | LATITUDE | LONGITUDE | ON STREET NAME | NUMBER OF PERSONS INJURED | NUMBER OF PERSONS KILLED | NUMBER OF PEDESTRIANS INJURED | NUMBER OF PEDESTRIANS KILLED | NUMBER OF CYCLIST INJURED | ... | Year | Month | Day | Hour | Time_of_Day | BOROUGH_BROOKLYN | BOROUGH_MANHATTAN | BOROUGH_QUEENS | BOROUGH_STATEN ISLAND | Log_Hour | |

|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|

| 0 | 2021-09-11 | 2:39 | NaN | NaN | WHITESTONE EXPRESSWAY | 2 | 0 | 0 | 0 | 0 | ... | 2021 | 9 | 11 | 0.0 | Late Night | False | False | False | False | 0.0 |

| 1 | 2022-03-26 | 11:45 | NaN | NaN | QUEENSBORO BRIDGE UPPER | 1 | 0 | 0 | 0 | 0 | ... | 2022 | 3 | 26 | 0.0 | Late Night | False | False | False | False | 0.0 |

| 2 | 2023-11-01 | 1:29 | 40.621790 | -73.970024 | OCEAN PARKWAY | 1 | 0 | 0 | 0 | 0 | ... | 2023 | 11 | 1 | 0.0 | Late Night | True | False | False | False | 0.0 |

| 3 | 2022-06-29 | 6:55 | NaN | NaN | THROGS NECK BRIDGE | 0 | 0 | 0 | 0 | 0 | ... | 2022 | 6 | 29 | 0.0 | Late Night | False | False | False | False | 0.0 |

| 4 | 2022-09-21 | 13:21 | NaN | NaN | BROOKLYN BRIDGE | 0 | 0 | 0 | 0 | 0 | ... | 2022 | 9 | 21 | 0.0 | Late Night | False | False | False | False | 0.0 |

| ... | ... | ... | ... | ... | ... | ... | ... | ... | ... | ... | ... | ... | ... | ... | ... | ... | ... | ... | ... | ... | ... |

| 195 | 2022-03-23 | 21:00 | 40.776764 | -73.848015 | NaN | 0 | 0 | 0 | 0 | 0 | ... | 2022 | 3 | 23 | 0.0 | Late Night | False | False | True | False | 0.0 |

| 196 | 2022-03-26 | 12:00 | 40.747498 | -73.913090 | NaN | 0 | 0 | 0 | 0 | 0 | ... | 2022 | 3 | 26 | 0.0 | Late Night | False | False | True | False | 0.0 |

| 197 | 2022-03-26 | 13:28 | 40.701637 | -73.942276 | GRAHAM AVENUE | 1 | 0 | 0 | 0 | 0 | ... | 2022 | 3 | 26 | 0.0 | Late Night | True | False | False | False | 0.0 |

| 198 | 2022-03-26 | 19:12 | 40.693490 | -73.826546 | NaN | 0 | 0 | 0 | 0 | 0 | ... | 2022 | 3 | 26 | 0.0 | Late Night | False | False | True | False | 0.0 |

| 199 | 2022-03-26 | 21:29 | 40.726463 | -73.859430 | WETHEROLE STREET | 1 | 0 | 1 | 0 | 0 | ... | 2022 | 3 | 26 | 0.0 | Late Night | False | False | True | False | 0.0 |

200 rows × 29 columns

In [ ]: