Week 7: transforms¶

The goal for this week is to find the most informative data representations

Experiment 1 - "listen" to the house market¶

In [1]:

import pandas as pd

import numpy as np

import matplotlib.pyplot as plt

df = pd.read_csv("datasets/House_Property_Sales_Time_Series.csv", usecols=["datesold", "price"],parse_dates=["datesold"])

# Group sales by month

yearly_grouped = df.groupby(pd.Grouper(key='datesold', freq='YE')).sum()

print(yearly_grouped)

# Map to 0-9 values

original_min = yearly_grouped['price'].min()

original_max = yearly_grouped['price'].max()

target_min = 0

target_max = 9

mapped = (((yearly_grouped['price'] - original_min) / (original_max - original_min)) * (target_max - target_min) + target_min).astype(int)

print(mapped.values)

price datesold 2007-12-31 76789450 2008-12-31 315547250 2009-12-31 707427239 2010-12-31 870123280 2011-12-31 925445775 2012-12-31 1026547544 2013-12-31 1172689133 2014-12-31 1696767719 2015-12-31 2284017698 2016-12-31 2482304198 2017-12-31 3051009584 2018-12-31 2548984623 2019-12-31 878345143 [0 0 1 2 2 2 3 4 6 7 9 7 2]

In [2]:

# Display basic chart

plt.plot(mapped.index, mapped.values,'o')

plt.xlabel('Years')

plt.ylabel('Sales')

plt.show()

In [3]:

# Play a different sound when the market grows, is stable or decline

import numpy as np

from IPython.display import Audio, display

digits = mapped.values

rate = 44100

def custom_tone(digit,duration,rate,amplitude):

frequencies = {

'1':(697,1209),'2':(697,2222),'3':(697,1477),

'4':(770,1209),'5':(770,3333),'6':(770,1477),

'7':(852,1209),'8':(852,6666),'9':(852,1477),

'*':(941,1209),'0':(941,1336),'#':(941,1477)}

low,high = frequencies[digit]

t = np.linspace(0,duration,int(rate*duration),endpoint=False)

tone = amplitude*(np.sin(2*np.pi*low*t)+np.sin(2*np.pi*high*t))

return tone

def generate_tone(digits,duration=0.3,silence=0.3,rate=44100,amplitude=0.5):

data = np.array([])

previous = 0

for digit in digits:

if (digit == previous):

tone = custom_tone('5',duration,rate,amplitude)

else:

if (digit > previous):

tone = custom_tone('8',duration,rate,amplitude)

else:

tone = custom_tone('2',duration,rate,amplitude)

previous = digit

silence = np.zeros(int(rate*duration))

data = np.concatenate((data,tone,silence))

return data

data = generate_tone(digits,rate=rate)

data = data/np.max(np.abs(data))*(2**15-1)

data = data.astype(np.int16)

display(Audio(data,rate=rate))

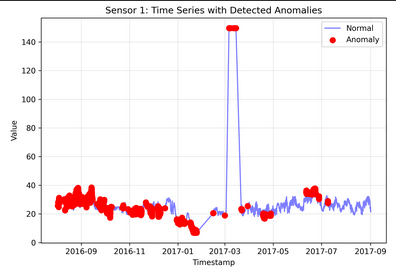

Experiment 2 - Improve raw sensor data¶

In [30]:

import pandas as pd

import numpy as np

import seaborn as sns

import matplotlib.pyplot as plt

df = pd.read_csv("datasets/sensor-fault-detection.csv", sep=';', parse_dates=["Timestamp"])

df.head()

Out[30]:

| Timestamp | SensorId | Value | |

|---|---|---|---|

| 0 | 2017-03-01 23:20:00+03:00 | 1 | 18.479807 |

| 1 | 2017-03-02 04:00:00+03:00 | 1 | 19.539112 |

| 2 | 2017-03-23 06:25:00+03:00 | 1 | 19.250198 |

| 3 | 2017-03-23 19:35:00+03:00 | 1 | 18.961285 |

| 4 | 2017-04-04 15:10:00+03:00 | 1 | 25.321623 |

In [31]:

df.shape

Out[31]:

(62629, 3)

In [32]:

print(df['SensorId'].unique())

df.describe()

[1]

Out[32]:

| SensorId | Value | |

|---|---|---|

| count | 62629.0 | 62629.000000 |

| mean | 1.0 | 24.203861 |

| std | 0.0 | 5.411599 |

| min | 1.0 | 6.886155 |

| 25% | 1.0 | 21.369419 |

| 50% | 1.0 | 24.550188 |

| 75% | 1.0 | 27.443794 |

| max | 1.0 | 149.601822 |

In [33]:

plt.figure(figsize=(10, 6))

plt.plot(df['Timestamp'], df['Value'], '.')

plt.title('Sensor Data')

plt.xlabel('Time')

plt.ylabel('Measurement')

plt.grid(True)

plt.xticks(rotation=45)

plt.tight_layout()

# we have outliers and some missing data!

# 8 outliers detected - all showing the exact same suspicious value of 149.6° (likely a sensor fault), occurring in March 2017.

In [38]:

# Check for missing values

print(f"\nMissing values:")

print(df.isnull().sum())

# Check for duplicates

duplicates = df.duplicated().sum()

print(f"\nDuplicate rows: {duplicates}")

if duplicates > 0:

df = df.drop_duplicates()

print(f"Removed {duplicates} duplicate rows")

# Sort by Timestamp and SensorId

df = df.sort_values('Timestamp').reset_index(drop=True)

# Remove any rows with missing values (if any)

df = df.dropna()

# Calculate Q1, Q3, and IQR

Q1 = df['Value'].quantile(0.25)

Q3 = df['Value'].quantile(0.75)

IQR = Q3 - Q1

# Define lower and upper bounds for outliers

lower_bound = Q1 - 1.5 * IQR

upper_bound = Q3 + 1.5 * IQR

print('Lower: ', lower_bound, ' Upper: ', upper_bound)

# Remove outliers

df = df[(df['Value'] >= lower_bound) & (df['Value'] <= upper_bound)]

plt.figure(figsize=(16, 6) )

plt.plot(df['Timestamp'], df['Value'], '.')

plt.title('Sensor Data - without outliers')

plt.xlabel('Time')

plt.ylabel('Measurement')

plt.grid(True)

plt.xticks(rotation=45)

plt.tight_layout()

# now we can see there are gaps with no readings !

# Define the start and end dates for filtering

start_date = '2017-04-15'

end_date = '2017-05-15'

# Filter the DataFrame

filtered_df = df.loc[(df['Timestamp'] >= start_date) & (df['Timestamp'] <= end_date)]

plt.figure(figsize=(16, 6) )

plt.plot(filtered_df['Timestamp'], filtered_df['Value'], '.')

plt.title('Sensor Data - one month zoom')

plt.xlabel('Time')

plt.ylabel('Measurement')

plt.grid(True)

plt.xticks(rotation=45)

plt.tight_layout()

# Define the start and end dates for filtering

start_date = '2017-04-29'

end_date = '2017-04-30'

# Filter the DataFrame

filtered_df = df.loc[(df['Timestamp'] >= start_date) & (df['Timestamp'] <= end_date)]

plt.figure(figsize=(16, 6) )

plt.plot(filtered_df['Timestamp'], filtered_df['Value'], '.')

plt.title('Sensor Data - one day zoom')

plt.xlabel('Time')

plt.ylabel('Measurement')

plt.grid(True)

plt.xticks(rotation=45)

plt.tight_layout()

Missing values: Timestamp 0 SensorId 0 Value 0 dtype: int64 Duplicate rows: 0 Lower: 12.835803025000002 Upper: 36.362971305

AI prompt: "Using the uploaded file, are there missing data for some days across the time range ?

AI answer: Yes, there are missing data for some days.

- 50 days completely missing (12.6% of the 396-day period)

- 347 days have data (87.4% coverage)

- 2 days with sparse data (only 5 readings each: Nov 19 & 21, 2016)

Key patterns:

- Average: 180.5 readings/day (~every 8 minutes)

- Most days have 276 readings (every 5 minutes)

- Maximum: 288 readings/day (every 5 minutes continuously)

Notable gaps:

- Early September 2016 (2 days)

- Mid-December 2016 (5 consecutive days)

- Late January to early February 2017 (15+ consecutive days) - largest gap

The sensor appears to have been offline or experiencing connectivity issues during these periods. This is common in IoT sensor deployments due to maintenance, network issues, or power outages.

So, what do we do from here ...

- Interpolation: it would help for small gaps, not for days or weeks without data. There is no way to figure out what happened exactly during those days.

- Cut more data: readings < 16 looks suspicious... The sensor went down just after and there is no other period of time with similar values

- Cut & Glue: ignoring the period of times with no data... I don't like it, it could break the model. Maybe there is some rythm in the data, over long period of time

- That's all noise, don't worry: maybe that sensor is just a door sensor (binary) with a lot of noise...

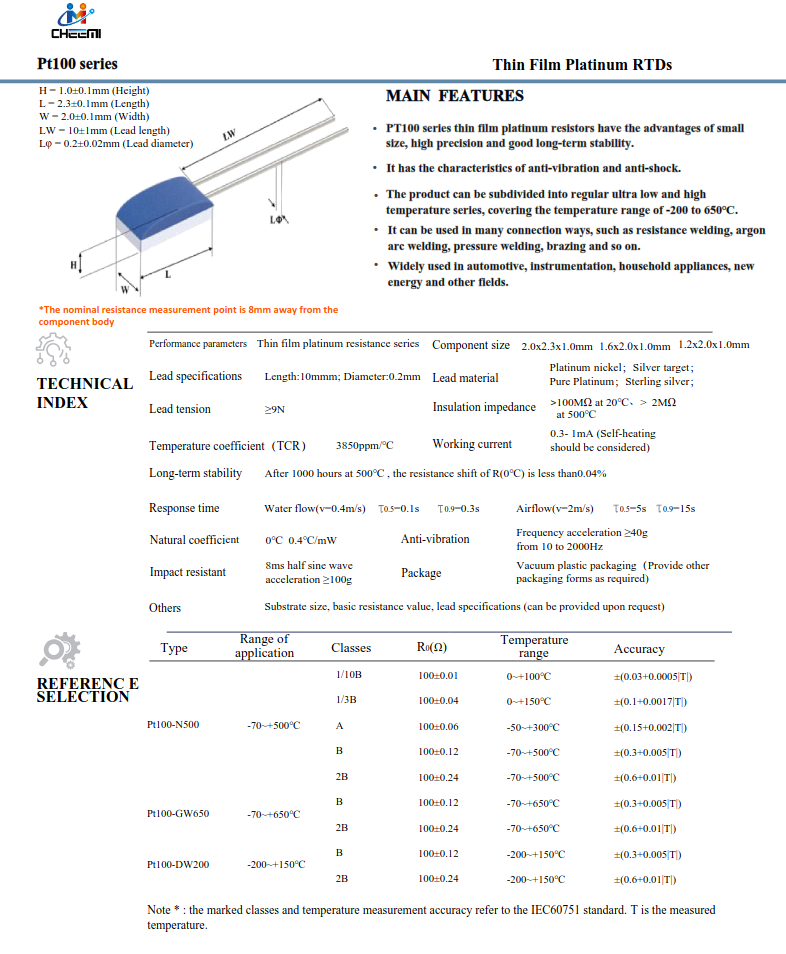

I discovered later that the sensor was a temperature sensor, a PT100. Here is the datasheet

It brings "prior knowledge":

- Depending on the model, there are limits (low and high)

- The mysterious 149.6 degrees appears to be the highest temperature in the range for some PT100 models

- Response time is not immediate, as we could see it in the "one day zoom" previously. It could help to figure out some missing readings

- ...

In [ ]: