Research > Final Project¶

Bayesian Optimization > Miximize Sharpe Ratio¶

ChatGPT Prompt: "Use Bayesian Optimization for Stock Analysis"

Response:

- Bayesian Optimization (BO) is a method for finding the best parameter values for a model when...the model doesn't have a 'closed-form' solution; evaluations are expensive (e.g. backtesting a trading strategy); fewer, smarter experiments instead of brute-fore grid/random search is desired.

- BO is commonly used to tune...Trading Strategy Parameters (SMA/EMA window lengths, MACD variations, RSI thresholds, Stop-Loss and Take-Profit Levels); Machine Learning Hyperparameters (XG Boost parameters, Neural Network architecture, GARCH model volatility parameters); Risk-Reward Optimization (Max Sharpe Ratio, Min Volatility, Max Return under constraint).

- BO builds a probabilistic model (Gaussian Process or Tree Parzen Estimator) of the objective and chooses where to test next based on expected improvement.

Bayesian Optimization Procedure

- Test a few random points

- Models the objective (Sharpe Ratio, etc.)

- Predicts where the best parameters might be

- Tests only the most promising areas

- Converges to the optimal region with far fewer evaluations

code example...

import sys

print(sys.version)

3.12.0 (tags/v3.12.0:0fb18b0, Oct 2 2023, 13:03:39) [MSC v.1935 64 bit (AMD64)]

pip install bayesian-optimization yfinance pandas numpy

# Import Libraries

import numpy as np

import pandas as pd

import yfinance as yf

from bayes_opt import BayesianOptimization

# Download Stock Price

data = yf.download("NVDA", start="2020-01-01")

close = data["Close"]

C:\Users\senna\AppData\Local\Temp\ipykernel_52276\4142185428.py:2: FutureWarning: YF.download() has changed argument auto_adjust default to True

data = yf.download("NVDA", start="2020-01-01")

[*********************100%***********************] 1 of 1 completed

# MACD Optimizer

def macd_strategy(fast, slow, signal):

# Cast to int because the optimizer passes floats

fast = int(fast)

slow = int(slow)

signal = int(signal)

# Basic sanity: make sure fast < slow, otherwise skip

if fast >= slow:

return -1e6 # punish invalid combinations

# Ensure we work with a 1D price Series (not a DataFrame)

price = close.squeeze() # if close is (n,1) DataFrame, this makes it a Series

# Compute MACD using price

ema_fast = price.ewm(span=fast, adjust=False).mean()

ema_slow = price.ewm(span=slow, adjust=False).mean()

macd = ema_fast - ema_slow

macd_signal = macd.ewm(span=signal, adjust=False).mean()

# Trading signal: long if macd > signal, short if macd < signal

signal_array = np.where(macd > macd_signal, 1, -1)

# 🔑 Flatten to 1D in case it's shape (n, 1)

signal_array = np.asarray(signal_array).ravel()

position = pd.Series(signal_array, index=price.index)

# Next-day returns

daily_returns = price.pct_change().shift(-1)

# Strategy returns: position * next-day return

strat_returns = (position * daily_returns).dropna()

if len(strat_returns) < 2:

return -1e6 # not enough data, punish

mean_ret = strat_returns.mean()

std_ret = strat_returns.std()

if std_ret == 0 or np.isnan(std_ret):

return -1e6 # avoid division by zero / NaN

sharpe = mean_ret / std_ret

# Ensure scalar float

return float(sharpe)

# Set Bayesian Optimization Search Space

pbounds = {

'fast': (5, 20),

'slow': (20, 60),

'signal': (5, 20)

}

# Run Optimizer

optimizer = BayesianOptimization(

f=macd_strategy,

pbounds=pbounds,

random_state=42,

)

# sanity check

print(type(close), getattr(close, "shape", None))

print(macd_strategy(12, 26, 9))

# optimiizer

optimizer.maximize(

init_points=5, # random exploration

n_iter=25, # number of BO steps

)

<class 'pandas.core.frame.DataFrame'> (1494, 1) 0.0015917149370049744 | iter | target | fast | slow | signal | ------------------------------------------------------------- | 1 | -0.003622 | 10.618101 | 58.028572 | 15.979909 | | 2 | -0.001716 | 13.979877 | 26.240745 | 7.3399178 | | 3 | 0.0051059 | 5.8712541 | 54.647045 | 14.016725 | | 4 | -0.012246 | 15.621088 | 20.823379 | 19.548647 | | 5 | 0.0060529 | 17.486639 | 28.493564 | 7.7273745 | | 6 | 0.0074873 | 14.177793 | 27.081731 | 9.9148254 | | 7 | -0.005907 | 12.133381 | 40.615718 | 16.804650 | | 8 | -0.004408 | 7.3045643 | 50.371981 | 13.944226 | | 9 | -0.004786 | 8.0354559 | 23.621292 | 9.7255889 | | 10 | 0.0034848 | 15.822249 | 27.928175 | 9.3486802 | | 11 | 0.0012803 | 13.936310 | 27.480764 | 11.714182 | | 12 | 0.0035931 | 15.089475 | 25.660809 | 10.245168 | | 13 | 0.0008060 | 13.122292 | 28.672044 | 9.3465059 | | 14 | 0.0007976 | 18.935903 | 27.327750 | 8.4579160 | | 15 | -0.000840 | 17.359605 | 30.395785 | 7.5158661 | | 16 | 0.0075519 | 13.041378 | 26.071989 | 10.094416 | | 17 | -0.000480 | 17.419953 | 27.617790 | 6.0054842 | | 18 | 0.0042859 | 12.893582 | 24.634095 | 11.119140 | | 19 | -0.000795 | 11.372922 | 26.406567 | 10.739794 | | 20 | -0.004228 | 13.336312 | 24.716421 | 9.3795091 | | 21 | 0.0075519 | 13.775163 | 26.230229 | 10.751048 | | 22 | 0.0028836 | 14.059227 | 25.105605 | 12.277775 | | 23 | -.549e-06 | 5.0134866 | 56.162408 | 14.032980 | | 24 | -0.002636 | 7.3132460 | 54.545115 | 13.250811 | | 25 | 0.0050410 | 13.151439 | 27.033000 | 10.241574 | | 26 | -0.001480 | 15.216094 | 27.060640 | 10.636084 | | 27 | 0.0061376 | 13.886257 | 26.298971 | 9.7739464 | | 28 | 0.0054361 | 12.959076 | 25.886019 | 11.197008 | | 29 | 0.0028308 | 16.615078 | 27.678008 | 8.0534724 | | 30 | -0.002424 | 17.453019 | 28.774511 | 9.0129120 | =============================================================

According to ChatGPT, the table above contains the following information.

- inter = interation

- target = the Sharpe Ratio of the MACD strategy (higher value = better performance relative to volatility, better risk-return profile)

- fast = fast (short-term, 12) EMA window tested by optimizer

- slow = slow (long-term, 26) EMA window tested by optimizer

- signal = signal line window (9)

# Print Best Found Parameters

print(optimizer.max)

{'target': np.float64(0.00755198127205693), 'params': {'fast': np.float64(13.041378353189243), 'slow': np.float64(26.0719891633693), 'signal': np.float64(10.094416661343582)}}

The last code prints the best Sharpe Ratio (target) generated during the optimization runs (iteration 17) and the 'fast', 'slow' and 'signal' parameters that generated it.

- Rerun with annualized values > make the resulting Sharpe Values larger and easier to interpret

- Now backtest and plot

- Compare to traditional MACD

# MACD optimization with Annualized Sharpe Ratio

def macd_strategy(fast, slow, signal):

# Cast to int because the optimizer passes floats

fast = int(fast)

slow = int(slow)

signal = int(signal)

# Basic sanity: make sure fast < slow, otherwise skip

if fast >= slow:

return -1e6 # punish invalid combinations

# Ensure we work with a 1D price Series (not a DataFrame)

price = close.squeeze() # if close is (n,1) DataFrame, this makes it a Series

# Compute MACD using price

ema_fast = price.ewm(span=fast, adjust=False).mean()

ema_slow = price.ewm(span=slow, adjust=False).mean()

macd = ema_fast - ema_slow

macd_signal = macd.ewm(span=signal, adjust=False).mean()

# Trading signal: long if macd > signal, short if macd < signal

signal_array = np.where(macd > macd_signal, 1, -1)

signal_array = np.asarray(signal_array).ravel() # flatten to 1D

position = pd.Series(signal_array, index=price.index)

# Next-day returns

daily_returns = price.pct_change().shift(-1)

# Strategy returns: position * next-day return

strat_returns = (position * daily_returns).dropna()

if len(strat_returns) < 2:

return -1e6 # not enough data, punish

mean_ret = strat_returns.mean()

std_ret = strat_returns.std()

if std_ret == 0 or np.isnan(std_ret):

return -1e6 # avoid division by zero / NaN

# Daily Sharpe

sharpe_daily = mean_ret / std_ret

# 🔑 Annualized Sharpe (using 252 trading days)

sharpe_annual = sharpe_daily * np.sqrt(252)

# Ensure scalar float

return float(sharpe_annual)

# Set Bayesian Optimization Search Space

pbounds = {

'fast': (5, 20),

'slow': (20, 60),

'signal': (5, 20)

}

# Run Optimizer

optimizer = BayesianOptimization(

f=macd_strategy,

pbounds=pbounds,

random_state=42,

)

# sanity check

print(type(close), getattr(close, "shape", None))

print(macd_strategy(12, 26, 9))

# optimiizer

optimizer.maximize(

init_points=5, # random exploration

n_iter=25, # number of BO steps

)

<class 'pandas.core.frame.DataFrame'> (1494, 1) 0.02526769128853202 | iter | target | fast | slow | signal | ------------------------------------------------------------- | 1 | -0.057507 | 10.618101 | 58.028572 | 15.979909 | | 2 | -0.027253 | 13.979877 | 26.240745 | 7.3399178 | | 3 | 0.0810550 | 5.8712541 | 54.647045 | 14.016725 | | 4 | -0.194408 | 15.621088 | 20.823379 | 19.548647 | | 5 | 0.0960870 | 17.486639 | 28.493564 | 7.7273745 | | 6 | 0.1188581 | 14.177793 | 27.081731 | 9.9148254 | | 7 | -0.093776 | 12.133381 | 40.615718 | 16.804650 | | 8 | -0.069988 | 7.3045643 | 50.371981 | 13.944226 | | 9 | -0.075990 | 8.0354559 | 23.621292 | 9.7255889 | | 10 | 0.0553204 | 15.822444 | 27.929174 | 9.3486489 | | 11 | 0.0203252 | 13.946982 | 27.486160 | 11.713781 | | 12 | 0.0570396 | 15.088161 | 25.657477 | 10.244422 | | 13 | 0.0127950 | 13.109116 | 28.660156 | 9.3533526 | | 14 | 0.0126629 | 18.933057 | 27.332209 | 8.4730437 | | 15 | -0.013347 | 17.353964 | 30.392941 | 7.5172228 | | 16 | 0.1198839 | 13.044368 | 26.070400 | 10.101693 | | 17 | -0.007629 | 17.421949 | 27.608447 | 6.0130746 | | 18 | 0.0680378 | 12.895039 | 24.641222 | 11.136283 | | 19 | -0.012635 | 11.379503 | 26.402007 | 10.755725 | | 20 | -0.067118 | 13.327823 | 24.706756 | 9.3870710 | | 21 | 0.1198839 | 13.779659 | 26.228730 | 10.758302 | | 22 | 0.0457760 | 14.076787 | 25.105925 | 12.291088 | | 23 | 0.0416774 | 5.0 | 56.064969 | 13.477997 | | 24 | -0.004194 | 5.2727304 | 54.956291 | 15.649019 | | 25 | 0.0800235 | 13.135644 | 27.036207 | 10.239986 | | 26 | -0.009862 | 6.6954698 | 54.777589 | 12.550407 | | 27 | -0.023497 | 15.248773 | 27.058751 | 10.651457 | | 28 | 0.0974315 | 13.886148 | 26.301891 | 9.7648224 | | 29 | 0.0862956 | 12.964071 | 25.896737 | 11.223886 | | 30 | 0.1532190 | 16.206568 | 28.284942 | 7.8577785 | =============================================================

# Print Best Found Parameters

print(optimizer.max)

{'target': np.float64(0.15321906859751228), 'params': {'fast': np.float64(16.206568886903206), 'slow': np.float64(28.284942912101357), 'signal': np.float64(7.857778507934423)}}

# Sanity Check

print("Annualized Sharpe for classic MACD (12, 26, 9):")

print(macd_strategy(12, 26, 9))

Annualized Sharpe for classic MACD (12, 26, 9): 0.02526769128853202

Interpreting the Outputs

Best Parameters:

Generated Sharpe Ratio = 0.127

Optimized MACD fast parameter = 14 days

Optimized MACD slow parameter = 27 days

Optimized MACD signal parameter = 10 days

Sanity Check > Classic MACD

Sharpe Ratio = 0.034

MACD fast = 12 days

MACD slow = 26 days

MACD signal = 9 days

The optimized version is better, but both results are low, because...

- a simple long/short daily strategy

- no transaction costs

- single stock over a limited time window

Now, backtest & plot...

! pip install matplotlib

import matplotlib.pyplot as plt

# define a helper backtest function

def backtest_macd(price, fast, slow, signal, name="MACD Strategy"):

# Ensure Series

price = price.squeeze()

# MACD components

ema_fast = price.ewm(span=fast, adjust=False).mean()

ema_slow = price.ewm(span=slow, adjust=False).mean()

macd = ema_fast - ema_slow

macd_signal = macd.ewm(span=signal, adjust=False).mean()

# Position: long (1) if macd > signal, short (-1) otherwise

signal_array = np.where(macd > macd_signal, 1, -1)

signal_array = np.asarray(signal_array).ravel()

position = pd.Series(signal_array, index=price.index)

# Next-day returns

daily_returns = price.pct_change().shift(-1)

# Strategy returns

strat_returns = (position * daily_returns).dropna()

# Equity curve (starting from 1.0)

equity = (1 + strat_returns).cumprod()

# Pack into DataFrame

result = pd.DataFrame({

"Price": price.loc[equity.index],

name: equity

})

return result

# run backtest with optimize MACD parameters

fast_opt = int(round(14.177793420835693))

slow_opt = int(round(27.081731781135897))

signal_opt = int(round(9.914825448824644))

print(f"Using optimized MACD({fast_opt}, {slow_opt}, {signal_opt})")

bt_opt = backtest_macd(close, fast_opt, slow_opt, signal_opt,

name=f"MACD_opt_{fast_opt}_{slow_opt}_{signal_opt}")

Using optimized MACD(14, 27, 10)

# plot equity curve

plt.figure(figsize=(10, 5))

plt.plot(bt_opt.index, bt_opt.iloc[:, 1], label=bt_opt.columns[1])

plt.title("Equity Curve - Optimized MACD Strategy")

plt.xlabel("Date")

plt.ylabel("Equity (starting at 1.0)")

plt.legend()

plt.grid(True)

plt.show()

Looks like just another price chart to me, but ChatGPT assures me that this is what it should look like. Its explanation...

Sharpe Ratio of 0.13 annualized is OK for a simple model

- equity starts at 1

- rises as high as 1.6

- declines from 2024 onwards

- by late 2025, the level is 0.55

Observations:

- the strategy did well 2020-2023

- Draws down early 2021

- Recovery through 2022

- Big surge 2023

- Starting 2024, MACD performs poorly in sideways market, Choppy high-volatility markets, Reversal heavy markets

- In 2024-2025 NVDA had repeated whipsaws (in prices). In sideways conditions, MACD flips long/short over and over...producing loses.

- Hence the 2024 declines

Important Note!! >>> MACD is a trend indicator...not predictive signal

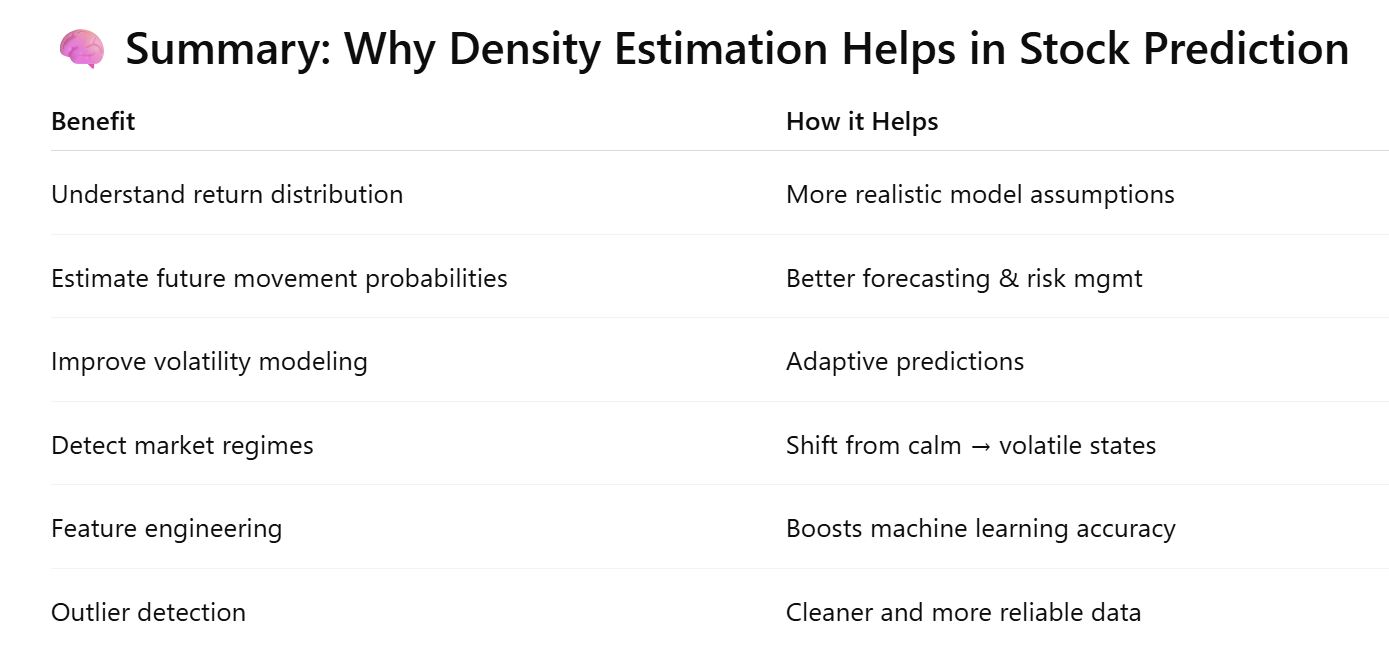

Density Estimation¶

ChatGPT Prompt: "How can Density Estimation be used to help gain insights into Stock Price Predictions"

"Density estimation is a powerful statistical tool that can help you understand the underlying distribution of stock-related variables, which in turn can improve forecasting, risk evaluation, and strategy design. While density estimation does not predict prices directly, it provides insights that make prediction models more informed and robust"

"Density estimation is a method used to approximate the probability distribution of a random variable (daily returns, volatility, trading volume, price changes"

2 Common Forms:

- Histogram (Bar Graph)

- Kernel Density Estimation (Smooth Continuous Curve)

Helping Stock Prediction¶

Help Understand Distribution of Returns

Stock returns are not normally distributed. They show:- fat tails (extreme moves more common than normal distribution predicts)

- skewness (price moves are not symmetric)

A KDE curve reveals this clearly, helping quantify the risk of large swings.

Estimate Probability of Future Price Movements

Density estimate of return calculations can be used to compute...

- Probability that tomorrow's return is > 1%

- Probability of loss > -2%

- Probability of hitting Stop-Loss or Take-Profit levels > interesting!!

Price Paths can be simulated using estimated distribution, useful for... - option pricing

- Monte Carlo simulations

- Risk management

- Algo-trading strategies

Improve Volatility Models > interesting!!

Stock Volatility has a distribution, KDE allows estimation:- Distribution of 20-day rolling volatility

- Likelihood of High-Volatility spikes

Model can adapt to periods of high or low volatility improving prediction accuracy.

Detect Regime Shifts

KDE curves change shape over time (curves of what??)- Calm Markets > narrow tall KDE

- Volatile Markets > wide, fat-tailed KDE

Sudden changes in the estimated density can signal market regime changes.

Feature Engineering for Machine Learning Models

ML models often perform better when fed features extracted from KDE rather than raw prices.

Density-based features can improve predictive power:- Tail Risk (probability in extreme ends of KDE)

- skewness/kurtosis(?)

- peak distribution zones

- density peaks near support/resistance levels

Identify Anomalies & Outliers

KDE helps find values that lie outside high-density regions.- Unusual large return

- Rare volume spike

- Excessive volatility

Outlier detection is essential for cleaning data before training prediction models

pip install numpy seaborn matplotlib

# import yahoo finance library

import yfinance as yf

nvda = yf.Ticker('NVDA') #specify stock ticker, assign to a variable

data = nvda.history(interval="1d", period="max") #pull historical data, specify interval and period

data #show data

| Open | High | Low | Close | Volume | Dividends | Stock Splits | |

|---|---|---|---|---|---|---|---|

| Date | |||||||

| 1999-01-22 00:00:00-05:00 | 0.040112 | 0.044767 | 0.035575 | 0.037605 | 2714688000 | 0.00 | 0.0 |

| 1999-01-25 00:00:00-05:00 | 0.040589 | 0.042021 | 0.037605 | 0.041545 | 510480000 | 0.00 | 0.0 |

| 1999-01-26 00:00:00-05:00 | 0.042021 | 0.042857 | 0.037724 | 0.038321 | 343200000 | 0.00 | 0.0 |

| 1999-01-27 00:00:00-05:00 | 0.038440 | 0.039395 | 0.036291 | 0.038202 | 244368000 | 0.00 | 0.0 |

| 1999-01-28 00:00:00-05:00 | 0.038202 | 0.038440 | 0.037843 | 0.038082 | 227520000 | 0.00 | 0.0 |

| ... | ... | ... | ... | ... | ... | ... | ... |

| 2025-12-04 00:00:00-05:00 | 181.619995 | 184.520004 | 179.960007 | 183.380005 | 167364900 | 0.01 | 0.0 |

| 2025-12-05 00:00:00-05:00 | 183.889999 | 184.660004 | 180.910004 | 182.410004 | 143971100 | 0.00 | 0.0 |

| 2025-12-08 00:00:00-05:00 | 182.639999 | 188.000000 | 182.399994 | 185.550003 | 204378100 | 0.00 | 0.0 |

| 2025-12-09 00:00:00-05:00 | 185.559998 | 185.720001 | 183.320007 | 184.970001 | 144719700 | 0.00 | 0.0 |

| 2025-12-10 00:00:00-05:00 | 184.970001 | 185.479996 | 182.039993 | 183.779999 | 161931100 | 0.00 | 0.0 |

6764 rows × 7 columns

# Example KDE of Daily Returns

import numpy as np

import seaborn as sns

import matplotlib.pyplot as plt

returns = np.log(data['Close'] / data['Close'].shift(1)).dropna()

sns.kdeplot(returns)

plt.title("Kernel Density Estimate of Daily Log Returns")

plt.show()

According to ChatGPT, this curve shows the probability shape of returns, which can then be used for simulation or probability estimation.

The curve shows how likely different daily returns for Nvidia have been historically.

Observations:

- Extremely tall, narrow peak at 0 return (Leptokurtic distribution)

- NVDA usually moves very little per day

- Distribution highly concentrated around zero

- similar behavior to most large tech stocks > many calm days, a few very volatile ones

- The curve is NOT symmetric...has heavier tails

- tall and narrow peak...but density spreads out much farther on both sides than normal distribution

- Nvidia has 'fat tailed' distribution

- ...more frequent large positive/negative moves that normal

- ...sensitive to earnings, news, market crashes, hype cycles, etc.

- Left tail extends further than the right...downside risk exists

- Big downside crashes have happened

- daily drops have been larger (in magnitude) than its biggest daily gains

- Nvidia has higher likelihood of sharp downward shocks

- Right tail still present...NVDA has had many large positive days

- NVDA also has huge positive rallies

- The KDE confirms that NVDA's returns are NOT Normally Distributed

- Classic Gaussian assumptions fail...since not normal distribution

- Fat Tails (a probability distribution that has more extreme outcomes than a normal Gaussian distribution would predict)

- Nvidia tails extend to -0.45 and +0.35...classic Fat Tail behavior

- Volatility clusters, regime shifts, sudden jumps...are more common for Nvidia

- For stocks...Fat Tail means extreme price moves are more common

- Implication for Prediction & Modeling

- Nvidia behaves like a two-regime asset

- Regime 1 Calm Days: small daily moves, in stable market, most trading days

- Regime 2 Volatile Days: earnings announcements, macro events, technology releases

- GMM is very appropriate for Nvidia because it can capture these 2 regimes

Definition: Skewness & Kurtosis

Skewness = asymmetry in a distribution. Zero skewness means perfect symmetry. Direction of tail.

- Negative Skew (long tail on the left)= most days are small gains, but sometimes there are large losses

Kurtosis = measures 'tailedness' or how heavy or light the tails are compared to a normal distribution. Normal distribution kurtosis = 3 (excess kurtosis = 0). High kurtosis means 'fat tails' or 'leptokurtic'. Tall peaks and heavy tails. Extreme events more likely than bell curve.

- Extreme moves happen more often than the normal distribution predicts. Size of tail.

Regime Inspection for Nvidia with Gaussian Mixture Model¶

ChatGPT had suggested that a GMM would be ideal for looking at the distribution probabilities of Nvidia's 2 distinct regimes, simplistically described as Calm Days and Volatile Days. Let's look to understand the code that ChatGPT offers up to illustrate this thesis.

pip install numpy pandas scikit-learn matplotlib --upgrade yfinance

import numpy as np

import pandas as pd

import yfinance as yf

from sklearn.mixture import GaussianMixture

import matplotlib.pyplot as plt

# Download NVDA daily data (adjust as you like)

ticker = "NVDA"

data = yf.download(ticker, start="2010-01-01") # long history

data = data.dropna()

data

C:\Users\senna\AppData\Local\Temp\ipykernel_52276\939044389.py:9: FutureWarning: YF.download() has changed argument auto_adjust default to True data = yf.download(ticker, start="2010-01-01") # long history [*********************100%***********************] 1 of 1 completed

| Price | Close | High | Low | Open | Volume |

|---|---|---|---|---|---|

| Ticker | NVDA | NVDA | NVDA | NVDA | NVDA |

| Date | |||||

| 2010-01-04 | 0.423807 | 0.426786 | 0.415097 | 0.424265 | 800204000 |

| 2010-01-05 | 0.429995 | 0.434579 | 0.422202 | 0.422202 | 728648000 |

| 2010-01-06 | 0.432746 | 0.433663 | 0.425640 | 0.429766 | 649168000 |

| 2010-01-07 | 0.424265 | 0.432287 | 0.421056 | 0.430454 | 547792000 |

| 2010-01-08 | 0.425182 | 0.428162 | 0.418306 | 0.420827 | 478168000 |

| ... | ... | ... | ... | ... | ... |

| 2025-12-04 | 183.380005 | 184.520004 | 179.960007 | 181.619995 | 167364900 |

| 2025-12-05 | 182.410004 | 184.660004 | 180.910004 | 183.889999 | 143971100 |

| 2025-12-08 | 185.550003 | 188.000000 | 182.399994 | 182.639999 | 204378100 |

| 2025-12-09 | 184.970001 | 185.720001 | 183.320007 | 185.559998 | 144719700 |

| 2025-12-10 | 183.779999 | 185.479996 | 182.039993 | 184.970001 | 161931100 |

4010 rows × 5 columns

# Compute daily log returns

data['LogReturn'] = np.log(data['Close'] / data['Close'].shift(1))

returns = data['LogReturn'].dropna().values.reshape(-1, 1) # shape (n_samples, 1)

# Fit 2-Regime GMM > Calm vs Volatile Periods

# sklearn fit distribution of NVDA returns as a mixture of 2 Gaussian Distros

# Regime 0 = Calm

# Regime 1 = Volatile

# Configuring the Model

gmm2 = GaussianMixture(

n_components=2, # 2 regimes

covariance_type='full', # full 1D covariance matrix for each regime

init_params='kmeans', # initialize cluster centers using k-means clustering

n_init=10, # 10 fitting iterations (should increase?)

random_state=42 # set random seed

)

# Fit the returns

gmm2.fit(returns)

# Regime labels and posterior probabilities

labels2 = gmm2.predict(returns) # hard cluster label specification (0 or 1) for each data point

probs2 = gmm2.predict_proba(returns) # soft probabilities for each regime for each (daily) data pont...some percentage for 1 and the other

print("2-regime means:", gmm2.means_.ravel()) # print means...'ravel' a numpy method turns array into 1D (flattened) view

print("2-regime stds :", np.sqrt(gmm2.covariances_.ravel())) # print standard deviation

print("2-regime weights:", gmm2.weights_) # print weights

2-regime means: [0.00135982 0.00161422] 2-regime stds : [0.04124314 0.01595234] 2-regime weights: [0.39139666 0.60860334]

The fit command above, does a lot according to ChatGPT. The explanation as follows:

- Runs K-means to find rough cluster centers

- Initializes 2 Gaussian distributions

- Run the Expectation-Maximization (EM) algorithm:

- E-step: computes probabilities each return belongs to each regime

- M-step: updates means, variances, weights

- Repeats until convergence

After fit, the GMM now has...

- means of each regime

- variances of each regime

- mixture weights

- log-likelihood of the fit

# Visualize Mixture vs Empirical Distribution

x = np.linspace(returns.min(), returns.max(), 1000).reshape(-1, 1)

# Mixture pdf for 2 regimes

log_prob_2 = gmm2.score_samples(x) # returns the log of the combined mixture probability distribution function...adding 2 Gaussians together

pdf_2 = np.exp(log_prob_2) # converting to probability distribution function

# log-probabilities of each component

log_probs_components = gmm2._estimate_log_prob(x) # shape (1000, 2)

comp_pdfs = np.exp(log_probs_components) # converting to probability distribution function

weighted_pdfs = comp_pdfs * gmm2.weights_ # Weight each component by its regime weight

plt.figure(figsize=(10, 5))

plt.hist(returns, bins=80, density=True, alpha=0.4, label='Empirical Histogram')

# plot mixture (combined) PDF

plt.plot(x, pdf_2, color='orange', linewidth=2, label='GMM pdf')

# plot the 2 regimes individually

plt.plot(x, weighted_pdfs[:, 0], 'r--', label='Regime 0 pdf')

plt.plot(x, weighted_pdfs[:, 1], 'g--', label='Regime 1 pdf')

plt.title("NVDA Daily Log Returns + 2-Regime GMM Fit")

plt.xlabel("Log return")

plt.ylabel("Density")

plt.legend()

plt.show()

# Visualize Regimes over Time

# assumes you already have:

# data -> DataFrame with 'Close'

# returns -> log returns (len = len(data) - 1)

# gmm3 -> fitted 3-regime GMM on `returns`

labels2 = gmm2.predict(returns) # shape (len(returns),)

idx_ret = data.index[1:] # align with returns

import matplotlib.pyplot as plt

fig, ax = plt.subplots(figsize=(12, 6))

# faint full price line in background

# ax.plot(data.index, data['Close'], color='black', linewidth=1, label='Close (background)')

# color-coded points by regime

colors = ['tab:blue', 'tab:orange']

for k, c in zip(range(2), colors):

mask = (labels2 == k)

ax.scatter(idx_ret[mask],

data['Close'].iloc[1:][mask],

s=1,

color=c,

label=f"Regime {k}")

ax.set_title("NVDA Close Price Colored by 2-Regime GMM")

ax.set_ylabel("Price")

ax.legend()

plt.tight_layout()

plt.show()

probs2 = gmm2.predict_proba(returns) # shape (n_samples, 3)

fig, ax1 = plt.subplots(figsize=(14, 6))

# ------------------------------

# 1. Regime Probability Background

# ------------------------------

regime_labels = [f"Regime {k}" for k in range(probs2.shape[1])]

ax1.stackplot(

idx_ret,

probs2.T,

labels=regime_labels,

alpha=0.6

)

ax1.set_ylabel("Regime Probability")

ax1.set_ylim(0, 1)

ax1.set_title("NVDA Price Overlaid on 2-Regime GMM Probabilities")

ax1.legend(loc="upper left")

# ------------------------------

# 2. Overlay NVDA Price (using second y-axis)

# ------------------------------

ax2 = ax1.twinx() # share x-axis, new y-axis

ax2.plot(data.index, data['Close'], color='black', linewidth=1.2, label="NVDA Close Price")

ax2.set_ylabel("Price ($)")

ax2.legend(loc="upper right")

plt.tight_layout()

plt.show()

3D visualization

# after fitting 2-regime GMM

# probs2 = gmm2.predict_proba(returns) # shape (n_samples, 2)

# idx_ret = data.index[1:] # align with returns

# prices_ret = data['Close'].iloc[1:].values # same length as probs2

# from mpl_toolkits.mplot3d import Axes3D # noqa: F401, needed for 3D

# import matplotlib.dates as mdates

#t_num = mdates.date2num(idx_ret) # numeric time for 3D plotting

from mpl_toolkits.mplot3d import Axes3D # noqa: F401

import matplotlib.dates as mdates

import numpy as np

import matplotlib.pyplot as plt

# --- align everything to returns length ---

idx_ret = data.index[1:] # index for returns

prices_ret = data['Close'].iloc[1:].to_numpy() # shape (N,)

probs2 = gmm2.predict_proba(returns) # shape (N, 2)

p_reg1 = probs2[:, 1] # shape (N,)

# convert dates to numeric for 3D

t_num = mdates.date2num(idx_ret.to_pydatetime()) # shape (N,)

# just to be safe, flatten all of them

t_num = np.asarray(t_num).ravel()

prices_ret = np.asarray(prices_ret).ravel()

p_reg1 = np.asarray(p_reg1).ravel()

print("t_num shape :", t_num.shape)

print("prices_ret shape:", prices_ret.shape)

print("p_reg1 shape :", p_reg1.shape)

t_num shape : (4009,) prices_ret shape: (4009,) p_reg1 shape : (4009,)

#3d Scatterplot with Consistent Sizes

fig = plt.figure(figsize=(12, 8))

ax = fig.add_subplot(111, projection='3d')

sc = ax.scatter(

t_num,

prices_ret,

p_reg1,

c=p_reg1, # color by probability

cmap='viridis',

s=5

)

ax.set_xlabel("Date")

ax.set_ylabel("Price ($)")

ax.set_zlabel("P(Regime 1)")

ax.set_title("NVDA – 3D View: Time, Price, Probability of Regime 1")

ax.xaxis.set_major_formatter(mdates.DateFormatter('%Y'))

fig.colorbar(sc, ax=ax, label='P(Regime 1)')

plt.tight_layout()

plt.show()

Option B

import numpy as np

n_samples, n_reg = probs2.shape

# meshgrid: time (x) × regime index (y)

T, R = np.meshgrid(t_num, np.arange(n_reg)) # T,R shape (n_reg, n_samples)

# Z needs same shape: each row = probs for one regime

Z = probs2.T # shape (n_reg, n_samples)

fig = plt.figure(figsize=(12, 8))

ax = fig.add_subplot(111, projection='3d')

surf = ax.plot_surface(

T, R, Z,

cmap='viridis',

edgecolor='none',

alpha=0.9

)

ax.set_xlabel("Date")

ax.set_ylabel("Regime index")

ax.set_zlabel("Probability")

ax.set_title("NVDA – Regime Probabilities in 3D (2-Regime GMM)")

ax.yaxis.set_ticks([0, 1])

ax.xaxis.set_major_formatter(mdates.DateFormatter('%Y'))

fig.colorbar(surf, ax=ax, label='Probability')

plt.tight_layout()

plt.show()

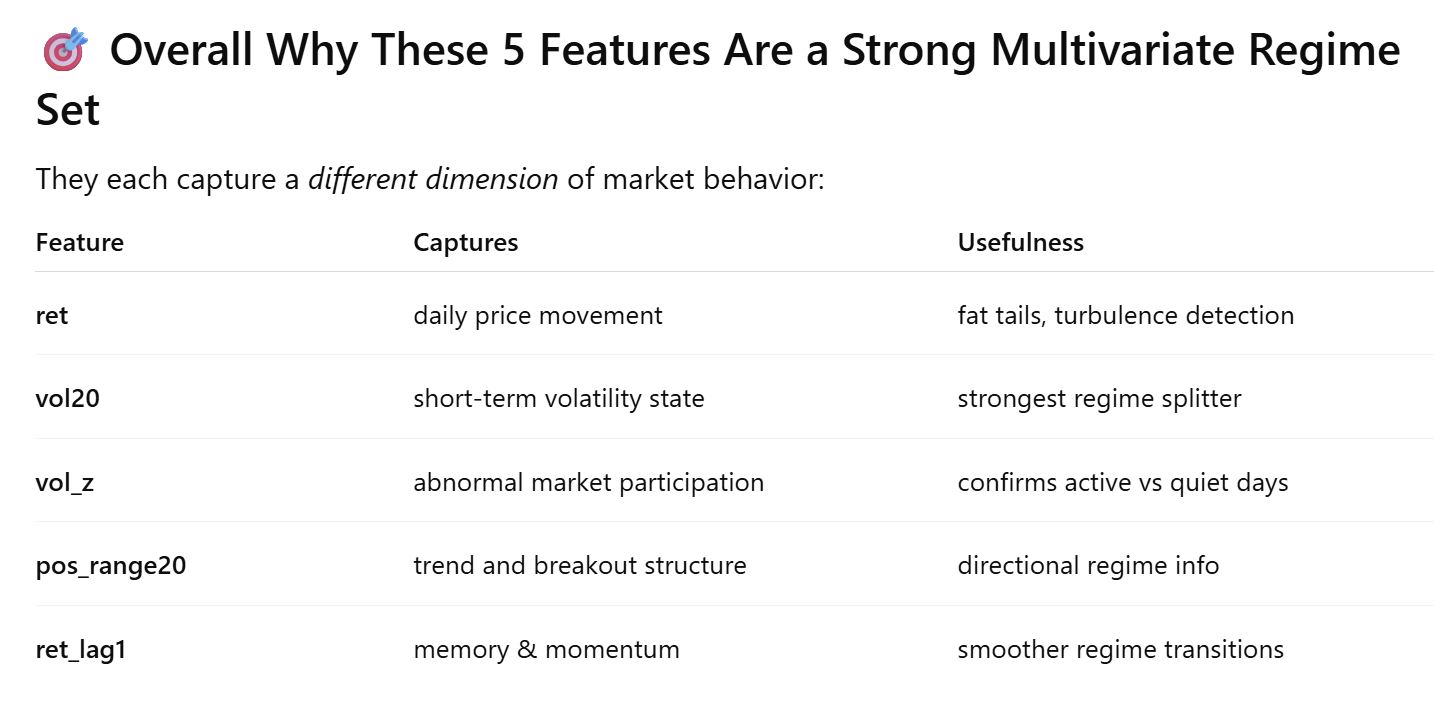

Multi-Dimensional Data Analysis¶

So far I have only looked at price return data. Neil suggest that I add 'more dimensions' to my analysis...market volatility, etc.

With too little time to get this work done, I go to ChatGPT for help.

"Go from 1D to multivariate: more features per day"

- build a feature matrix 'x' with multiple columns

- features could be...

- return

- rolling volatility

- volume surprise

- price position in range

- lagged return

- conceptually, clustering points in space

ChatGPT provides this example combining returns + volatility + volume. Each regime is defined by joint behavior of the 3 features.

! pip install scikit-learn

import numpy as np

import pandas as pd

from sklearn.mixture import GaussianMixture

X = pd.DataFrame(index=returns.index)

X["ret"] = returns

X["vol20"] = X["ret"].rolling(20).std() * np.sqrt(252)

X["vol_z"] = (

(data["Volume"] - data["Volume"].rolling(20).mean())

/ data["Volume"].rolling(20).std()

)

X["pos_range20"] = (

(data["Close"] - data["Close"].rolling(20).min())

/ (data["Close"].rolling(20).max() - data["Close"].rolling(20).min())

)

X["ret_lag1"] = X["ret"].shift(1)

X = X.dropna()

The Kernel crashed while executing code in the current cell or a previous cell. Please review the code in the cell(s) to identify a possible cause of the failure. Click <a href='https://aka.ms/vscodeJupyterKernelCrash'>here</a> for more info. View Jupyter <a href='command:jupyter.viewOutput'>log</a> for further details.

#GMM a fit on a 5D feature spaceye

gmm2 = GaussianMixture(

n_components=2,

covariance_type="full",

init_params="kmeans", # using k-means to initialize component means

n_init=10, # runs EM algo 10x with different k-means initializations...can be increased

random_state=42,

)

gmm2.fit(X) # or X.values

labels2 = gmm2.predict(X)

probs2 = gmm2.predict_proba(X)

# Plot 2 Feature GMM > Return & 20-day Rolling Volatility

import matplotlib.pyplot as plt

fig, ax = plt.subplots(figsize=(8, 6))

scatter = ax.scatter(

X["ret"],

X["vol20"],

c=labels2,

cmap="viridis", # consistent color map

alpha=0.6,

s=20

)

ax.set_xlabel("Daily return")

ax.set_ylabel("20-day annualized volatility")

ax.set_title("GMM regimes in (return, vol20) space")

# Legend with matching colors

unique_labels = sorted(set(labels2))

handles = []

for lab in unique_labels:

handles.append(

plt.Line2D(

[], [], marker="o", linestyle="", markersize=10,

label=f"Regime {lab}",

color=scatter.cmap(scatter.norm(lab))

)

)

ax.legend(handles=handles)

plt.tight_layout()

plt.show()

# With decision boundary line between 2 regime

import numpy as np

import matplotlib.pyplot as plt

# Select the two features we’re plotting

x_feat = "ret"

y_feat = "vol20"

x = X[x_feat].values

y = X[y_feat].values

# Grid over feature space

x_min, x_max = x.min(), x.max()

y_min, y_max = y.min(), y.max()

xx, yy = np.meshgrid(

np.linspace(x_min, x_max, 200),

np.linspace(y_min, y_max, 200)

)

# Build grid points in the full feature space:

# Start from mean of all features, then vary only the two we care about.

X_mean = X.mean().values

grid = np.tile(X_mean, (xx.size, 1))

# Find column indices for x_feat and y_feat

cols = list(X.columns)

i_x = cols.index(x_feat)

i_y = cols.index(y_feat)

grid[:, i_x] = xx.ravel()

grid[:, i_y] = yy.ravel()

# Get probabilities from GMM

probs_grid = gmm2.predict_proba(grid)

# difference between regime 1 and regime 0

diff = probs_grid[:, 1] - probs_grid[:, 0]

diff = diff.reshape(xx.shape)

fig, ax = plt.subplots(figsize=(8, 6))

# Scatter

scatter = ax.scatter(x, y, c=labels2, cmap="viridis", alpha=0.6, s=20)

# Decision contour where p1 = p0 -> diff = 0

cs = ax.contour(xx, yy, diff, levels=[0], linewidths=2, colors='red')

ax.set_xlabel(x_feat)

ax.set_ylabel(y_feat)

ax.set_title("GMM regimes with decision boundary")

# Legend

unique_labels = sorted(set(labels2))

handles = []

for lab in unique_labels:

handles.append(

plt.Line2D(

[], [], marker="o", linestyle="", markersize=10,

label=f"Regime {lab}",

color=scatter.cmap(scatter.norm(lab))

)

)

handles.append(

plt.Line2D([], [], color="black", linestyle="-", label="Decision boundary")

)

ax.legend(handles=handles)

plt.tight_layout()

plt.show()

c:\Users\senna\AppData\Local\Programs\Python\Python312\Lib\site-packages\sklearn\utils\validation.py:2691: UserWarning: X does not have valid feature names, but GaussianMixture was fitted with feature names warnings.warn(

#Gaussian Elippes for each Components > show covariance shapes

from matplotlib.patches import Ellipse

def draw_ellipse(mean, cov, ax, edgecolor, n_std=2.0):

# Eigen-decomposition for orientation

vals, vecs = np.linalg.eigh(cov)

order = vals.argsort()[::-1]

vals, vecs = vals[order], vecs[order]

theta = np.degrees(np.arctan2(*vecs[:, 0][::-1]))

width, height = 2 * n_std * np.sqrt(vals)

ell = Ellipse(

xy=mean,

width=width,

height=height,

angle=theta,

edgecolor=edgecolor,

facecolor="none",

linewidth=2

)

ax.add_patch(ell)

x_feat = "ret"

y_feat = "vol20"

cols = list(X.columns)

ix = cols.index(x_feat)

iy = cols.index(y_feat)

fig, ax = plt.subplots(figsize=(8, 6))

scatter = ax.scatter(

X[x_feat], X[y_feat],

c=labels2, cmap="viridis", alpha=0.6, s=20

)

# Draw ellipses per component

for k in range(gmm2.n_components):

mean_k = gmm2.means_[k, [ix, iy]]

cov_k = gmm2.covariances_[k][np.ix_([ix, iy], [ix, iy])]

color = scatter.cmap(scatter.norm(k))

draw_ellipse(mean_k, cov_k, ax, edgecolor=color, n_std=2.0)

ax.set_xlabel(x_feat)

ax.set_ylabel(y_feat)

ax.set_title("GMM regimes with Gaussian ellipses")

# Legend

unique_labels = sorted(set(labels2))

handles = []

for lab in unique_labels:

handles.append(

plt.Line2D(

[], [], marker="o", linestyle="", markersize=10,

label=f"Regime {lab}",

color=scatter.cmap(scatter.norm(lab))

)

)

ax.legend(handles=handles)

plt.tight_layout()

plt.show()

# Marginal Distributions per Regime (Histograms)

fig, axes = plt.subplots(1, 2, figsize=(12, 4))

for k in [0, 1]:

mask = labels2 == k

axes[0].hist(X.loc[mask, "ret"], bins=50, alpha=0.5, label=f"Regime {k}")

axes[1].hist(X.loc[mask, "vol20"], bins=50, alpha=0.5, label=f"Regime {k}")

axes[0].set_title("Return distribution by regime")

axes[0].set_xlabel("ret")

axes[1].set_title("Vol20 distribution by regime")

axes[1].set_xlabel("vol20")

for ax in axes:

ax.legend()

plt.tight_layout()

plt.show()

# Scatter colored by probability of regime

prob_regime1 = probs2[:, 1]

fig, ax = plt.subplots(figsize=(8, 6))

cmap = "viridis"

sc = ax.scatter(

X["ret"],

X["vol20"],

c=prob_regime1,

cmap=cmap,

alpha=0.7,

s=20

)

ax.set_xlabel("Daily return")

ax.set_ylabel("20-day annualized volatility")

ax.set_title("P(Regime 1) over (ret, vol20)")

cbar = plt.colorbar(sc, ax=ax)

cbar.set_label("P(Regime 1)")

plt.tight_layout()

plt.show()

# Time Encoding: Colors by Date, Outline by Regime

# Nice!

dates = X.index

t = np.linspace(0, 1, len(dates)) # normalized time

fig, ax = plt.subplots(figsize=(8, 6))

sc = ax.scatter(

X["ret"], X["vol20"],

c=t,

cmap="plasma",

alpha=0.7,

s=20

)

# Outline by regime

for k in [0, 1]:

mask = labels2 == k

ax.scatter(

X.loc[mask, "ret"],

X.loc[mask, "vol20"],

facecolors="none",

edgecolors="white" if k == 0 else "black",

linewidths=0.7,

s=30,

label=f"Regime {k} outline"

)

ax.set_xlabel("Daily return")

ax.set_ylabel("20-day annualized volatility")

ax.set_title("Time-colored scatter with regime outlines")

cbar = plt.colorbar(sc, ax=ax)

cbar.set_label("Normalized time (old → new)")

ax.legend()

plt.tight_layout()

plt.show()

# Pairwise Feature Scatterplot with GMM Regimes

# for sure for FP

from pandas.plotting import scatter_matrix

cols = ["ret", "vol20", "vol_z", "pos_range20", "ret_lag1"]

scatter_matrix(

X[cols],

figsize=(10, 10),

diagonal="hist",

c=labels2,

alpha=0.5

)

plt.suptitle("Pairwise feature scatter with GMM regimes", y=1.02)

plt.show()

Interpreting the above Pair-Plot¶

- shows every pair of features from the multivariate dataset > return, 20-day Rolling Volatility, Volatility Z, 20-day Rolling Position Range, Return Lag

- each diagonal cell is a histogram of a single feature

- each off-diagonal cell is a scatter plot of Feature A vs Feature B

- Purple color is one regime, yellow color is the other regime

- Insight 1: Yellow points cluster where volatility is low > yellow is calm, low vol regime

- Insight 2: Purple points spread much wider on vol20 > purple is turbulent, high vol regime

- Insight 3: Vol Z vs Vol 20 > Yellow low volatility and low volume surprise, Purple spray outward...large volume shocks cluster in high-vol regime > volume surprises correlate strongly with regime switching

- Insight 4: pos_range 20 = where price sits inside recent 20-day high/low range...yellow hugs the full 0-1 range, price oscillates in normal channels. purple clusters near mid-range but with more vertical noise > model found that range position is weakly predictive

- Insight 5: ret and ret_lag1 show both regimes overlaps heavily, daily returns alone rarely give clean clustering > multi-variate shows that no single feature give clean separation, but the combination does

Volatility is the dominant clustering driver

import numpy as np

import matplotlib.pyplot as plt

from scipy.stats import norm, gaussian_kde

# ----------------------------------------------

# Inputs: X, labels2, gmm2

# X: DataFrame with your features

# labels2: regime labels (0/1)

# gmm2: fitted GaussianMixture model

# ----------------------------------------------

features = ["ret", "vol20", "vol_z", "pos_range20", "ret_lag1"]

cols = features

fig, axes = plt.subplots(len(cols), len(cols), figsize=(14, 14))

for i, feat_i in enumerate(cols):

for j, feat_j in enumerate(cols):

ax = axes[i, j]

if i == j:

# -----------------------------------------

# DIAGONAL: HISTOGRAM + KDE + GMM DENSITIES

# -----------------------------------------

data_feat = X[feat_i].dropna()

# Histogram

ax.hist(

data_feat,

bins=40,

density=True,

alpha=0.4,

color="lightblue"

)

# ---- KDE Line (SMOOTH NON-PARAMETRIC) ----

xs = np.linspace(data_feat.min(), data_feat.max(), 400)

kde = gaussian_kde(data_feat)

ax.plot(xs, kde(xs), color="red", linewidth=2, label="KDE")

# ---- GMM Component Densities ----

comp_pdf_sum = np.zeros_like(xs)

for k in range(gmm2.n_components):

mean_k = gmm2.means_[k, i]

std_k = np.sqrt(gmm2.covariances_[k][i, i])

weight_k = gmm2.weights_[k]

# individual Gaussian component

comp_pdf = weight_k * norm.pdf(xs, mean_k, std_k)

comp_pdf_sum += comp_pdf

# optional dashed individual curve

ax.plot(xs, comp_pdf, linestyle="--", linewidth=1, color="gray")

# ---- Mixture Density Curve (sum of components) ----

ax.plot(xs, comp_pdf_sum, color="blue", linewidth=2, label="Mixture PDF")

ax.set_title(f"{feat_i}")

ax.set_yticks([])

else:

# -----------------------------------------

# OFF-DIAGONAL: SCATTER PLOT

# -----------------------------------------

ax.scatter(

X[feat_j],

X[feat_i],

c=labels2,

cmap="viridis",

alpha=0.5,

s=8

)

# Label axes on edges

if j == 0:

ax.set_ylabel(feat_i)

if i == len(cols) - 1:

ax.set_xlabel(feat_j)

plt.suptitle("Pairwise Feature Matrix with KDE + GMM Component PDFs + Mixture Density", y=1.02)

plt.tight_layout()

plt.show()

List of Features

I asked ChatGPT "provide full name and definitions and relevance of each of these features ret, vol20, vol_z, pos_range20, ret_lag1"

- Daily Logarithmic Return = measures the percentage price change from one day to the next, expressed in log form

- captures day-to-day price movement

- good for detecting heavy-tailed behavior (fat tailed distributions in high vol regimes)

- Useful input to clustering because return distribution shifts during shocks, events, and turbulence.

- Low-vol regime → return values cluster narrowly near zero

- High-vol regime → return distribution spreads out (large +/- swings)

- 20-Day Rolling Annualized Volatility = This is the classic measure of short-term volatility used in quant finance

- Volatility is the single most important driver of regime segmentation in financial markets

- High-vol and low-vol regimes are structurally different states

- spreads widen

- volume increases

- returns behave differently

- market microstructure shifts

- This feature typically provides the clearest separation between regimes

- Volume Z-Score (20-Day) = Measures how unusual today’s volume is compared to the last 20 trading days

- Trading volume spikes during:

- breakouts

- earnings

- news shocks

- panic selling

- Volume is strongly correlated with volatility, but carries independent information about market participation and order flow imbalance

- High-vol regime consistently shows elevated vol_z

- Low-vol regime → volume stays near its mean (centered around 0)

- Helps the model distinguish “quiet low-volatility days” vs. “active trending or panic days.”

- Trading volume spikes during:

- Price Position in 20-Day Range = This places the price on a 0 to 1 scale within its recent high–low range

- 0 → price at the 20-day low

- 1 → price at the 20-day high

- 0.5 → middle of the recent range

- Detects breakout vs. mean-reverting structure:

- trending regime → prices often near upper range

- fear/panic regime → price near lower range

- sideways regime → price oscillates in the middle

- Not as strong a separator as volatility or volume, but adds valuable directional context

- High-vol spikes usually pull the price out of the range → shifts model likelihood

- Low-vol periods often drift within a narrower band

- Lagged Daily Log Return (1 Day) = Just the previous day’s return

- This adds time structure into the feature space:

- Captures short-term momentum

- Captures short-term mean reversion

- Helps model transitions:

- Large negative return → more likely entering high-vol regime

- Consecutive small returns → more likely in calm regime

- While returns alone are not great for clustering, lagged information improves regime persistence (like a pseudo-HMM effect)

- If yesterday was shocking (big negative return), today’s probability of the high-vol regime typically increases

- If yesterday was calm, model likelihood shifts toward low-vol

- This adds time structure into the feature space:

Relative Strength Index (14-day)¶

Adding RSI, MACD, Market Beta

import numpy as np

import pandas as pd

import matplotlib.pyplot as plt

from scipy.stats import norm, gaussian_kde

from sklearn.mixture import GaussianMixture

import yfinance as yf

# ============================================================

# 1. LOAD MARKET INDEX (SPY for Beta)

# ============================================================

market_df = yf.download("SPY", start=data.index.min(), end=data.index.max())

market_df = market_df.reindex(data.index) # align to NVDA's index

# ============================================================

# 2. BASE FEATURES

# ============================================================

# Daily log returns (ensure 1-D Series)

ret = pd.Series(

np.log(data["Close"] / data["Close"].shift(1)).values.flatten(),

index=data.index,

name="ret"

)

X = pd.DataFrame(index=data.index)

X["ret"] = ret

# 20-day annualized volatility

X["vol20"] = X["ret"].rolling(20).std() * np.sqrt(252)

# Volume Z-score (20-day)

vol_roll = data["Volume"].rolling(20)

X["vol_z"] = (data["Volume"] - vol_roll.mean()) / vol_roll.std()

# Position in 20-day high-low range

roll = data["Close"].rolling(20)

range20 = (roll.max() - roll.min()).replace(0, np.nan)

X["pos_range20"] = (data["Close"] - roll.min()) / range20

# Lagged return

X["ret_lag1"] = X["ret"].shift(1)

# ============================================================

# 3. NEW FEATURES: RSI, MACD Histogram, Market Beta

# ============================================================

# ----------- RSI(14) -----------

delta = data["Close"].diff()

gain = delta.clip(lower=0)

loss = -delta.clip(upper=0)

avg_gain = gain.rolling(14).mean()

avg_loss = loss.rolling(14).mean()

RS = avg_gain / avg_loss

X["rsi14"] = 100 - (100 / (1 + RS))

# ----------- MACD Histogram (12, 26, 9) -----------

ema12 = data["Close"].ewm(span=12, adjust=False).mean()

ema26 = data["Close"].ewm(span=26, adjust=False).mean()

MACD = ema12 - ema26

MACDsig = MACD.ewm(span=9, adjust=False).mean()

X["macdhist"] = MACD - MACDsig

# ----------- Market Beta (60-day Rolling) -----------

market_close = market_df["Close"]

if isinstance(market_close, pd.DataFrame):

market_close = market_close.iloc[:, 0]

market_close = pd.to_numeric(market_close, errors="coerce")

# 1-D market returns and stock returns

market_ret = pd.Series(

np.log(market_close / market_close.shift(1)).values,

index=market_close.index,

name="market_ret"

)

stock_ret = ret # already a 1-D Series

window = 60

# Rolling covariance and variance (both Series)

cov_series = stock_ret.rolling(window).cov(market_ret)

var_series = market_ret.rolling(window).var()

beta60 = cov_series / var_series

X["beta60"] = beta60

# ============================================================

# 4. CLEAN DATA & FIT GMM (2 regimes)

# ============================================================

X = X.dropna()

gmm2 = GaussianMixture(

n_components=2,

covariance_type="full",

init_params="kmeans",

n_init=10,

random_state=42,

)

gmm2.fit(X)

labels2 = gmm2.predict(X)

probs2 = gmm2.predict_proba(X)

# ============================================================

# 5. PAIRPLOT WITH KDE + GMM PDFs

# ============================================================

features = ["ret", "vol20", "vol_z", "pos_range20",

"ret_lag1", "rsi14", "macdhist", "beta60"]

fig, axes = plt.subplots(len(features), len(features), figsize=(22, 22))

for i, feat_i in enumerate(features):

for j, feat_j in enumerate(features):

ax = axes[i, j]

if i == j:

# ---------------------------------------------------

# DIAGONAL: HISTOGRAM + KDE + MIXTURE PDF

# ---------------------------------------------------

data_feat = X[feat_i]

ax.hist(

data_feat,

bins=40,

density=True,

alpha=0.35,

color="lightblue"

)

xs = np.linspace(data_feat.min(), data_feat.max(), 400)

kde = gaussian_kde(data_feat)

ax.plot(xs, kde(xs), color="red", linewidth=2)

# GMM components + mixture

mix_pdf = np.zeros_like(xs)

for k in range(gmm2.n_components):

mean_k = gmm2.means_[k, i]

std_k = np.sqrt(gmm2.covariances_[k][i, i])

weight_k = gmm2.weights_[k]

comp_pdf = weight_k * norm.pdf(xs, mean_k, std_k)

mix_pdf += comp_pdf

ax.plot(xs, comp_pdf, "--", color="gray", linewidth=1)

ax.plot(xs, mix_pdf, color="blue", linewidth=2)

ax.set_title(feat_i)

ax.set_yticks([])

else:

# ---------------------------------------------------

# OFF-DIAGONALS: scatter colored by regime

# ---------------------------------------------------

ax.scatter(

X[feat_j],

X[feat_i],

c=labels2,

cmap="viridis",

alpha=0.4,

s=12

)

if j == 0:

ax.set_ylabel(feat_i)

if i == len(features) - 1:

ax.set_xlabel(feat_j)

plt.suptitle(

"Pairwise Feature Matrix with KDE + GMM Component PDFs (8-D Regime Space)",

y=1.02

)

plt.tight_layout()

plt.show()

C:\Users\senna\AppData\Local\Temp\ipykernel_52276\78685735.py:12: FutureWarning: YF.download() has changed argument auto_adjust default to True

market_df = yf.download("SPY", start=data.index.min(), end=data.index.max())

[*********************100%***********************] 1 of 1 completed

from sklearn.decomposition import PCA

# If you used scaling:

# X_input = X_scaled # numpy array

# If you did NOT use scaling:

X_input = X.values # use raw features

pca = PCA(n_components=2)

X_pca = pca.fit_transform(X_input)

print("Explained variance ratios:", pca.explained_variance_ratio_)

fig, ax = plt.subplots(figsize=(8, 6))

scatter = ax.scatter(

X_pca[:, 0],

X_pca[:, 1],

c=labels2,

alpha=0.6

)

ax.set_xlabel("PC1")

ax.set_ylabel("PC2")

ax.set_title("GMM regimes in PCA space")

handles = []

for k in sorted(set(labels2)):

handles.append(

plt.Line2D([], [], marker="o", linestyle="", label=f"Regime {k}")

)

ax.legend(handles=handles)

plt.show()

Explained variance ratios: [0.87251742 0.09891611]

regime_series = pd.Series(labels2, index=X.index, name="regime")

prob_regime1 = pd.Series(probs2[:, 1], index=X.index, name="prob_regime_1")

fig, ax = plt.subplots(2, 1, figsize=(12, 8), sharex=True)

# Price or close

ax[0].plot(data.loc[X.index, "Close"])

ax[0].set_ylabel("NVDA Close")

ax[0].set_title("NVDA price with GMM regime probability")

# Probability of regime 1

ax[1].plot(prob_regime1)

ax[1].set_ylabel("P(Regime 1)")

ax[1].set_xlabel("Date")

plt.show()

df_regime = X.copy()

df_regime["regime"] = labels2

for k in sorted(df_regime["regime"].unique()):

print(f"\n=== Regime {k} ===")

print(df_regime[df_regime["regime"] == k].describe()[["ret", "vol20", "vol_z", "pos_range20"]])

=== Regime 0 ===

ret vol20 vol_z pos_range20

count 1445.000000 1445.000000 1445.000000 1445.000000

mean 0.000543 0.563806 0.678261 0.529580

std 0.042882 0.210477 1.281086 0.373168

min -0.207711 0.129971 -2.847612 0.000000

25% -0.028635 0.404649 -0.344320 0.173616

50% -0.000918 0.563352 0.499215 0.533719

75% 0.030032 0.676657 1.550514 0.918644

max 0.260876 1.396895 4.072415 1.000000

=== Regime 1 ===

ret vol20 vol_z pos_range20

count 2545.000000 2545.000000 2545.000000 2545.000000

mean 0.002121 0.330991 -0.413406 0.631074

std 0.015511 0.103084 0.617095 0.338578

min -0.046106 0.088809 -2.602145 0.000000

25% -0.008054 0.256820 -0.820109 0.341045

50% 0.002107 0.319964 -0.485011 0.726854

75% 0.012397 0.390361 -0.029610 0.946544

max 0.048913 0.651198 1.430103 1.000000

print(type(ret))

print(ret.shape)

<class 'pandas.core.frame.DataFrame'> (4010, 1)

The Strategy¶

Nvidia's data is primary time-series data. As such, I am postulating that meaningful insight will be to search for changes in one or more variables or dimensions of the stock's data over time.

Until now, I have conducted analysis on one dimension (price returns) and over the longest timeframe (from IPO to the present). For the Final Presentation, I should:

- Conduct analysis on a consecutive series of shorter timeframes

- write a program to automate the analysis of one short timeframe after another

- ...collecting the output data from each run

- ...plotting visualization for each run

- Look at more variables and see if they might have some relationship

- Present the final series of outputs as a dynamic...moving picture or sound file...over time

Data Science Procedure

- data selection and preparation

- data analysis

- data presentation (visualization, sonification)

Idea

- for sure, i think i want to show Nvidia's analysis results dynamically

- let's say it is an animation...color and speed could be come means of expressing data dimensions...frequency (dark vs light colors), amplitude (faster or slower)

Insight Expression > Visualization/Sonification¶

Traditional visualization is primarily 2D, or when it is 3D...most often static. What if movement over time can also reveal insights? A heartbeat monitor usually shows a steady pulse rhythm, which is not so interesting. But when the rhythm accelerates or decelerates, or when the pulse is larger or smaller than normal...we pay attention. Something unusual has occurred and we need to have a closer look.

Pure Data Sonification of Images¶

image to sound in pure data

Sonification in Pure Data

Sonification Algorithm

From Data to Music