Assignment : Machine Learning¶

scikit-learn¶

I started this assignment by trying to understand the codes shared by Neil during his class. And this was my prompt.

explain this code:

from sklearn.neural_network import MLPClassifier import numpy as np X = [[0,0],[0,1],[1,0],[1,1]] y = [0,1,1,0] classifier = MLPClassifier(solver='lbfgs',hidden_layer_sizes=(4),activation='tanh',random_state=1) classifier.fit(X,y) print(f"score: {classifier.score(X,y)}") print("Predictions:") np.c_[X,classifier.predict(X)]

The response that i got form ChatGPT was:

✅ Full Code Explanation (Simple Terms)

from sklearn.neural_network import MLPClassifier

import numpy as np

You import a neural network model (MLPClassifier).

You import NumPy for handling arrays.

X = [[0,0],[0,1],[1,0],[1,1]]

y = [0,1,1,0]

X = your input data Each pair represents two inputs. Example: [0, 1]

y = your output labels Example: input [0,1] → output 1

💡 These data points represent the XOR problem:

0 XOR 0 = 0

0 XOR 1 = 1

1 XOR 0 = 1

1 XOR 1 = 0

classifier = MLPClassifier(solver='lbfgs', hidden_layer_sizes=(4), activation='tanh', random_state=1)

This creates a neural network classifier with:

solver='lbfgs' → algorithm used to train the model

hidden_layer_sizes=(4) → one hidden layer with 4 neurons

activation='tanh' → type of function in each neuron

random_state=1 → makes results reproducible

👉 This neural network will try to learn the XOR pattern.

classifier.fit(X, y)

This trains the neural network.

The model learns the relationship between inputs (X) and outputs (y).

👉 After this, the model knows how XOR works.

print(f"score: {classifier.score(X,y)}")

This prints the accuracy of the model.

Since XOR has 4 items, and neural network learns perfectly, score = 1.0 (100% correct).

print("Predictions:")

np.c_[X, classifier.predict(X)]

classifier.predict(X) → model predicts outputs for each input

np.c_[] → concatenates input and predicted output side-by-side

You get a table like:

input1 input2 prediction 0 0 0 0 1 1 1 0 1 1 1 0

👉 This shows that the neural network has learned XOR.

⭐ Super Simple Summary

You gave the neural network data for XOR.

It learned the XOR rule.

It predicts correctly.

You print its accuracy and predictions.

what is sklearn?

- Scikit-learn, often referred to as sklearn, is a free and open-source machine learning library for Python. It provides a wide range of algorithms and tools for various machine learning tasks, making it a fundamental library for data scientists and machine learning practitioners.

What is MLPClassifier?

A Multilayer Perceptron (MLP) classifier is a type of artificial neural network (ANN) used for classification tasks. It is a fundamental deep learning model that learns to map input data to output categories by training on a dataset using a process called backpropagation.

In this classifier, it is structured into layers with neurons (node) with multilayers where the nodes are connected to the hidden layer such as:

- Input Layer : Initial Data or features

- Hidden Layers : One or more layers positioned between input and output layer

- Output Layer : The final layer will produce the prediction.

Why do we have to define the input data and as well as output value ?

How does the neuron works in the neural network classifier?

X = [[0,0],[0,1],[1,0],[1,1]]

y = [0,1,1,0]

The data above indicates the input layer (X) where we will get two input as per the given dataset and the y indicates the Output Layer

classifier = MLPClassifier(solver='lbfgs',hidden_layer_sizes=(4),activation='tanh',random_state=1)

- This line creates a neural network classifier (MLPClassifier) with specific settings.

- The solver='lbfgs' chooses an optimization algorithm that works well for small datasets.

- The parameter hidden_layer_sizes=(4,) means the network has one hidden layer containing 4 neurons.

- The activation='tanh' function gives each neuron a nonlinear behavior, allowing the model to learn patterns like XOR.

- Finally, random_state=1 ensures that the model starts with the same random weights each time so results are reproducible.

Overall, this line sets up a small neural network model but does not train it; it only prepares it for training when you later call classifier.fit(X, y)

classifier.fit(X,y) is the learning step where the model studies the data and figures out the best weights to make accurate predictions based on the input and output

To know about the Algorithm, i refered to this youtube video to know more.

Youtube video i refered:

After learning what the code is then i tried to make the changes in the code.

from sklearn.neural_network import MLPClassifier

import numpy as np

X = [[0,0],[0,1],[1,0],[1,1]]

y = [0,1,1,1]

classifier = MLPClassifier(solver='lbfgs',hidden_layer_sizes=(4,3),activation='tanh',random_state=1)

classifier.fit(X,y)

print(f"score: {classifier.score(X,y)}")

print("Predictions:")

np.c_[X,classifier.predict(X)]

score: 1.0 Predictions:

array([[0, 0, 0],

[0, 1, 1],

[1, 0, 1],

[1, 1, 1]])

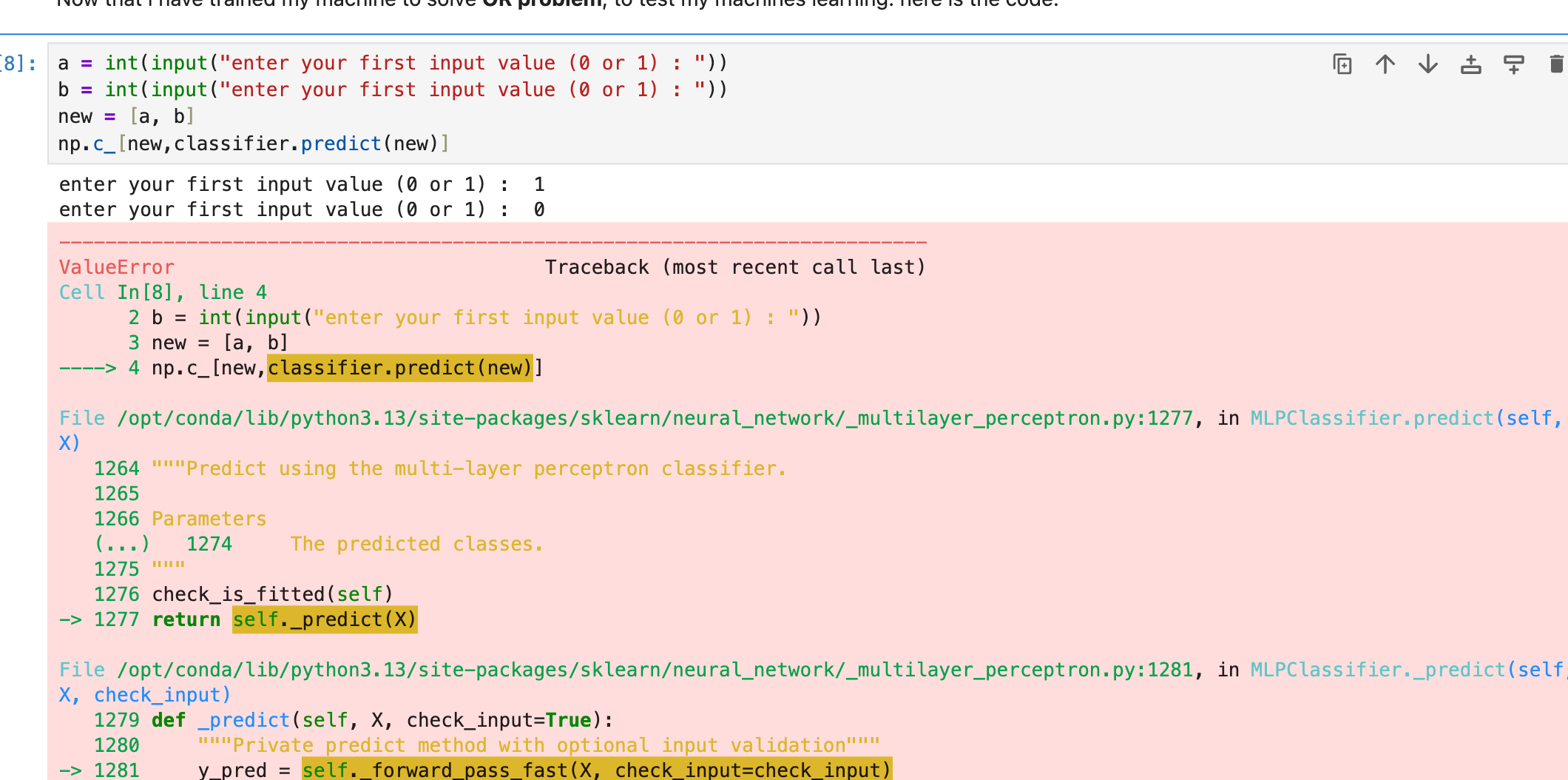

Now that i have trained my machine to solve OR problem, to test my machines learning. here is the truth table for OR, to understand the working and output for OR gate:

# a = int(input("enter your first input value (0 or 1) : "))

# b = int(input("enter your second input value (0 or 1) : "))

#new = [[a, b]]

#print(classifier.predict(new))

in the above code i will get two user input and then make a list inside a list as per the formate of the initial training data in the main code new = [[a, b]] and i will use this line for the machine to predict the output classifier.predict(new)

Error faced¶

the reason why i got this error was because i had to make sure the data input must be as a pair ([a, b]) and it must be inside the list new = [[a, b]] and then instead of using classifier same like the Neil code, I just had to call the predict method like this classifier.predict(new)

My Work¶

In the class note shared by Neil, he had trained his machine to detect hand written numbers therefore I wanted to learn how to detect dzongkha numbers. And the code shared by Neil is :

from sklearn.neural_network import MLPClassifier

feedforward neural network from scikit-learn used for classification tasks.

import numpy as np

used to handle arrays and load .npy files.

xtrain = np.load('datasets/MNIST/xtrain.npy')

xtrain → input images for training (each image is flattened into a 1D array).

ytrain = np.load('datasets/MNIST/ytrain.npy')

ytrain → labels for training images (digits 0–9).

xtest = np.load('datasets/MNIST/xtest.npy')

xtest → input images for testing the model.

ytest = np.load('datasets/MNIST/ytest.npy')

ytest → labels for testing.

print(f"read {xtrain.shape[1]} byte data records, {xtrain.shape[0]} training examples, {xtest.shape[0]} testing examples\n")

xtrain.shape[0] → number of training examples

xtrain.shape[1] → number of features per example (pixels in flattened image)

xtest.shape[0] → number of testing examples

classifier = MLPClassifier(solver='adam',hidden_layer_sizes=(100),activation='relu',random_state=1,verbose=True,tol=0.05)

solver='adam' → optimization algorithm for training (works well for large datasets).

hidden_layer_sizes=(100) → one hidden layer with 100 neurons.

activation='relu' → non-linear function for neurons (good for deep learning).

random_state=1 → reproducible results.

verbose=True → shows training progress in the console.

tol=0.05 → stopping tolerance: training stops if loss changes less than 0.05.

classifier.fit(xtrain,ytrain)

print(f"\ntest score: {classifier.score(xtest,ytest)}\n")

predictions = classifier.predict(xtest)

fig,axs = plt.subplots(1,5)

for i in range(5):

axs[i].imshow(jnp.reshape(xtest[i],(28,28)))

axs[i].axis('off')

axs[i].set_title(f"predict: {predictions[i]}")

plt.tight_layout()

plt.show()

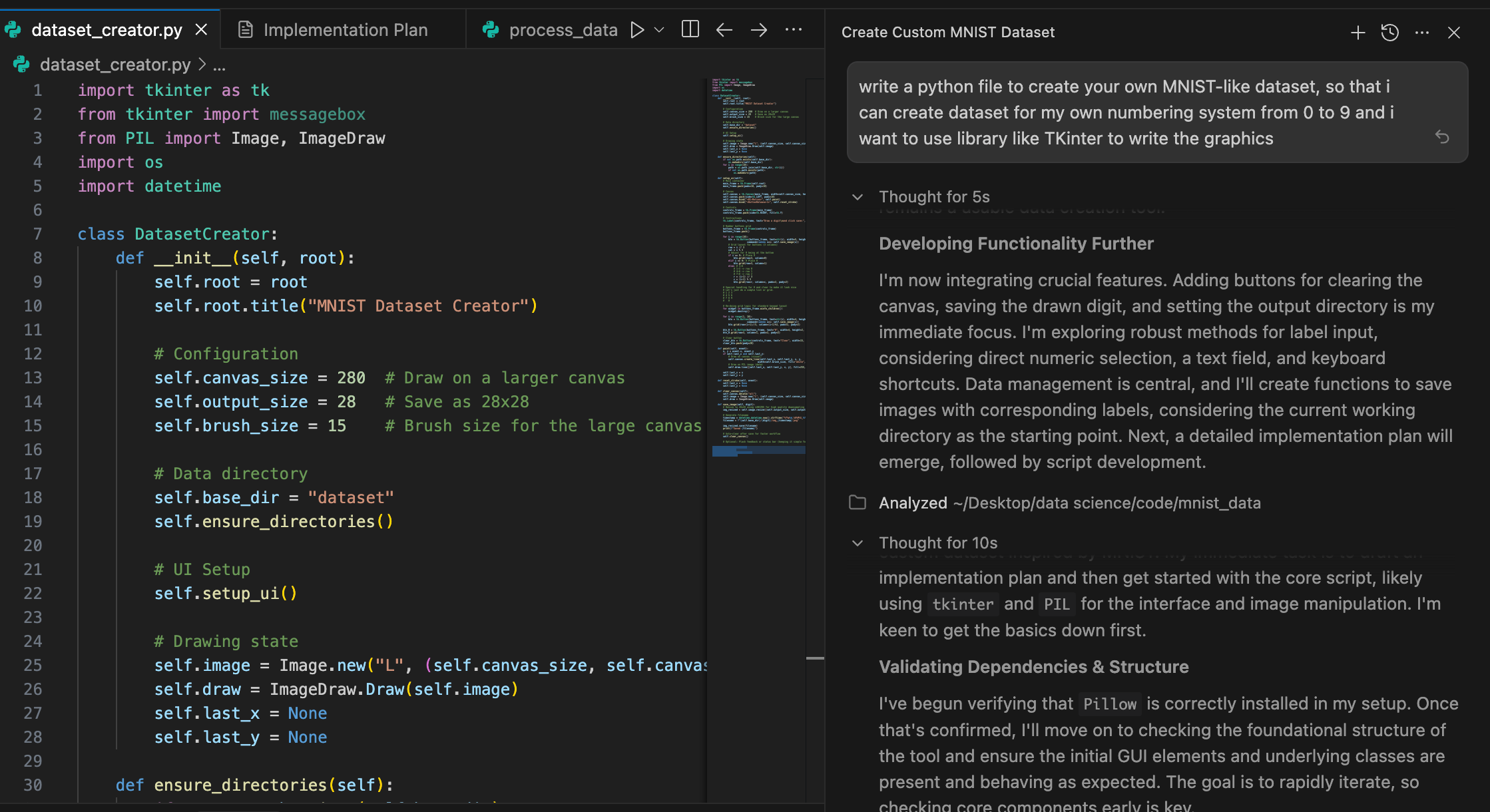

After going through the code explained by the ChatGPT, i understood that to train my machine i needed to create MNIST dataset. I also refered to the documentation by Rico

import tkinter as tk

from tkinter import messagebox

from PIL import Image, ImageDraw

import os

import datetime

class DatasetCreator:

def __init__(self, root):

self.root = root

self.root.title("MNIST Dataset Creator")

# Configuration

self.canvas_size = 280 # Draw on a larger canvas

self.output_size = 28 # Save as 28x28

self.brush_size = 15 # Brush size for the large canvas

# Data directory

self.base_dir = "dataset"

self.ensure_directories()

# UI Setup

self.setup_ui()

# Drawing state

self.image = Image.new("L", (self.canvas_size, self.canvas_size), 0) # 0 = Black background

self.draw = ImageDraw.Draw(self.image)

self.last_x = None

self.last_y = None

def ensure_directories(self):

if not os.path.exists(self.base_dir):

os.makedirs(self.base_dir)

for i in range(10):

path = os.path.join(self.base_dir, str(i))

if not os.path.exists(path):

os.makedirs(path)

def setup_ui(self):

# Main container

main_frame = tk.Frame(self.root)

main_frame.pack(padx=10, pady=10)

# Canvas

self.canvas = tk.Canvas(main_frame, width=self.canvas_size, height=self.canvas_size, bg="black")

self.canvas.pack(side=tk.LEFT, padx=10)

self.canvas.bind("<B1-Motion>", self.paint)

self.canvas.bind("<ButtonRelease-1>", self.reset_stroke)

# Controls

controls_frame = tk.Frame(main_frame)

controls_frame.pack(side=tk.RIGHT, fill=tk.Y)

# Instructions

tk.Label(controls_frame, text="Draw a digit\nand click save:", font=("Arial", 12)).pack(pady=(0, 10))

# Number buttons grid

buttons_frame = tk.Frame(controls_frame)

buttons_frame.pack()

for i in range(10):

btn = tk.Button(buttons_frame, text=str(i), width=5, height=2,

command=lambda x=i: self.save_image(x))

# Grid layout for buttons (3 columns)

row = i // 3

col = i % 3

# Adjust for 0 being at the bottom

if i == 9: # Place 9

btn.grid(row=3, column=0)

elif i == 0: # Place 0

btn.grid(row=3, column=1)

else: # 1-8

# 1-3 -> row 0

# 4-6 -> row 1

# 7-8 -> row 2

r = (i-1) // 3

c = (i-1) % 3

btn.grid(row=r, column=c, padx=2, pady=2)

# Special handling for 0 and clear to make it look nice

# Let's just do a simple list or grid.

# 1 2 3

# 4 5 6

# 7 8 9

# 0

# Re-doing grid logic for standard keypad layout

for widget in buttons_frame.winfo_children():

widget.destroy()

for i in range(1, 10):

btn = tk.Button(buttons_frame, text=str(i), width=4, height=2,

command=lambda x=i: self.save_image(x))

btn.grid(row=(i-1)//3, column=(i-1)%3, padx=2, pady=2)

btn_0 = tk.Button(buttons_frame, text="0", width=4, height=2, command=lambda: self.save_image(0))

btn_0.grid(row=3, column=1, padx=2, pady=2)

# Clear button

clear_btn = tk.Button(controls_frame, text="Clear", width=15, height=2, command=self.clear_canvas)

clear_btn.pack(pady=20)

def paint(self, event):

x, y = event.x, event.y

if self.last_x and self.last_y:

# Draw on canvas (visual)

self.canvas.create_line(self.last_x, self.last_y, x, y,

width=self.brush_size, fill="white", capstyle=tk.ROUND, smooth=True)

# Draw on PIL image (data)

self.draw.line([self.last_x, self.last_y, x, y], fill=255, width=self.brush_size)

self.last_x = x

self.last_y = y

def reset_stroke(self, event):

self.last_x = None

self.last_y = None

def clear_canvas(self):

self.canvas.delete("all")

self.image = Image.new("L", (self.canvas_size, self.canvas_size), 0)

self.draw = ImageDraw.Draw(self.image)

def save_image(self, digit):

# Resize to 28x28 using LANCZOS for high quality downsampling

img_resized = self.image.resize((self.output_size, self.output_size), Image.Resampling.LANCZOS)

# Generate filename

timestamp = datetime.datetime.now().strftime("%Y%m%d_%H%M%S_%f")

filename = f"{self.base_dir}/{digit}/img_{timestamp}.png"

img_resized.save(filename)

print(f"Saved {filename}")

# Auto-clear after save for faster workflow

self.clear_canvas()

# Optional: Flash feedback or status bar (keeping it simple for now)

if __name__ == "__main__":

root = tk.Tk()

app = DatasetCreator(root)

root.mainloop()

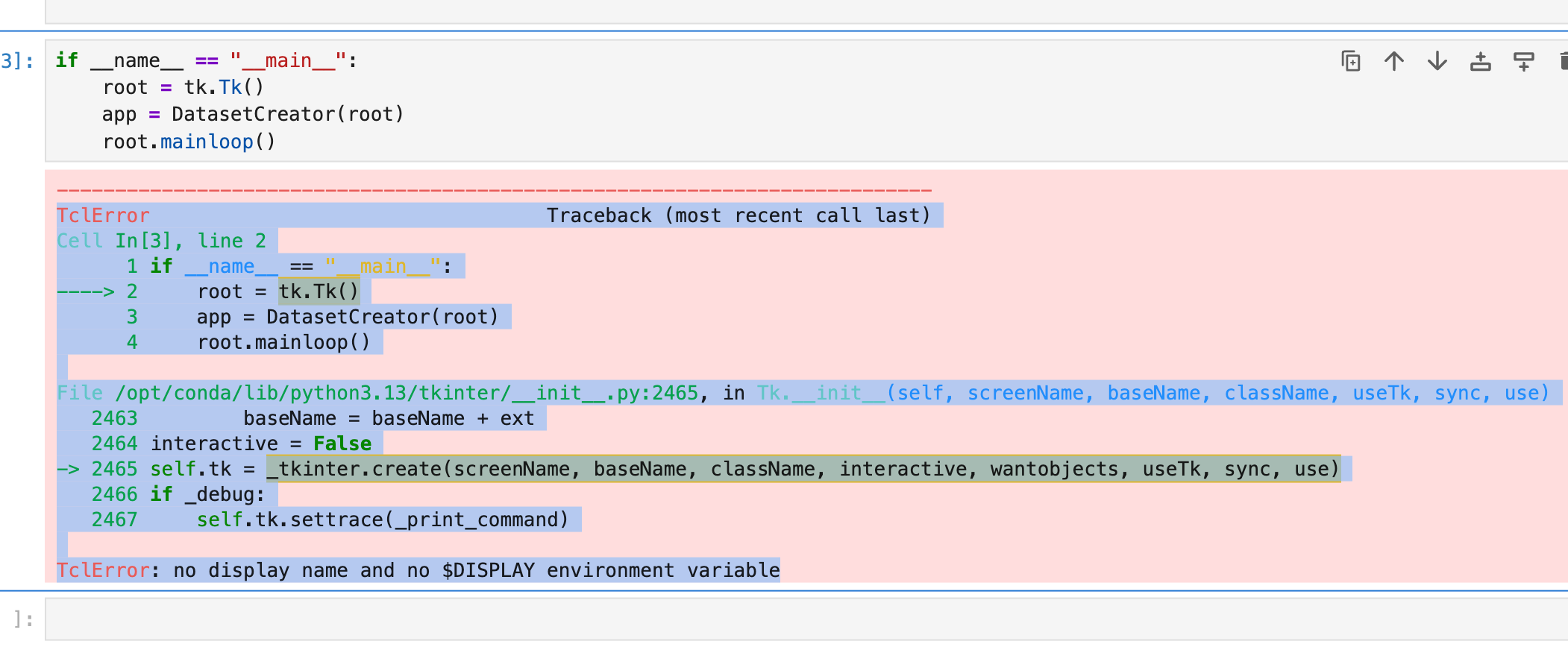

I tried to run the above code to use Tkinter to draw the Bhutanese numbering system but i got an error as shown bellow which means the display is not availabe in this juypter lab platform. therefore i will run the code in my computer and then share in the documentation.

To generate the code i used the anti-gravity IDE this tool has been really helpful because this is a IDE where AI (Gemini 3 Pro) is embedded in this IDE therefore helps me complete understand how it is thinking and how is is completing the task and it also helps me learn the codes it has generated Like mentioned below:

the prompt that i have used to generated the code so that i cna create data sets for my dzongkha numbering and i will document over the week.

from sklearn.preprocessing import StandardScaler

scaler = StandardScaler()

xtrain_scaled = scaler.fit_transform(xtrain) # fit on train only

xtest_scaled = scaler.transform(xtest)

from sklearn.neural_network import MLPClassifier

import numpy as np

# Load data

xtrain = np.load('datasets/MNIST/xtrain.npy')

ytrain = np.load('datasets/MNIST/ytrain.npy')

xtest = np.load('datasets/MNIST/xtest.npy')

ytest = np.load('datasets/MNIST/ytest.npy')

# FIX: Flatten 28x28 images → 784 numbers

xtrain = xtrain.reshape(xtrain.shape[0], -1)

xtest = xtest.reshape(xtest.shape[0], -1)

print(f"Training samples: {xtrain.shape}")

print(f"Testing samples: {xtest.shape}")

# Train model

classifier = MLPClassifier(

solver='adam',

hidden_layer_sizes=60,

activation='relu',

random_state=1,

verbose=True,

tol=0.05

)

classifier.fit(xtrain, ytrain)

print(f"\ntest score: {classifier.score(xtest, ytest)}\n")

Training samples: (228, 784) Testing samples: (58, 784) Iteration 1, loss = 29.30430607 Iteration 2, loss = 26.95845431 Iteration 3, loss = 25.07399061 Iteration 4, loss = 22.48134806 Iteration 5, loss = 19.64881547 Iteration 6, loss = 16.86414543 Iteration 7, loss = 14.16087635 Iteration 8, loss = 11.85130180 Iteration 9, loss = 9.79574780 Iteration 10, loss = 8.03793749 Iteration 11, loss = 6.60191401 Iteration 12, loss = 5.59063872 Iteration 13, loss = 4.65958918 Iteration 14, loss = 3.78445026 Iteration 15, loss = 3.06328032 Iteration 16, loss = 2.46365698 Iteration 17, loss = 2.00746127 Iteration 18, loss = 1.59987641 Iteration 19, loss = 1.27529115 Iteration 20, loss = 1.04291934 Iteration 21, loss = 0.84893504 Iteration 22, loss = 0.67118148 Iteration 23, loss = 0.53911014 Iteration 24, loss = 0.45502453 Iteration 25, loss = 0.36859596 Iteration 26, loss = 0.31774841 Iteration 27, loss = 0.27110518 Iteration 28, loss = 0.23216209 Iteration 29, loss = 0.18958377 Iteration 30, loss = 0.15351945 Iteration 31, loss = 0.12478225 Iteration 32, loss = 0.10053379 Iteration 33, loss = 0.08048907 Iteration 34, loss = 0.05917864 Iteration 35, loss = 0.04529420 Iteration 36, loss = 0.03332371 Iteration 37, loss = 0.03245813 Training loss did not improve more than tol=0.050000 for 10 consecutive epochs. Stopping. test score: 0.46551724137931033

from PIL import Image

import numpy as np

import matplotlib.pyplot as plt

# 1. Load the PNG image

img = Image.open("datasets/MNIST/lecor.png").convert("L") # convert to grayscale

# 2. Resize to MNIST size (28x28)

img = img.resize((28, 28))

# 3. Convert to numpy array

img_array = np.array(img)

# 4. Invert colors if necessary (MNIST is white digit on black background)

img_array = 255 - img_array

# 5. Flatten to (1, 784)

img_array = img_array.reshape(1, -1)

# 6. Ensure float type

img_array = img_array.astype(np.float32)

# 7. Predict with trained model

prediction = classifier.predict(img_array)

print("Predicted digit:", prediction[0])

plt.imshow(img, cmap='gray')

plt.title("Your input digit")

plt.axis('off')

plt.show()

Predicted digit: 3

Quick checklist (high priority)¶

- Scale / normalize inputs (very important for MLP).

- Reduce model capacity (fewer neurons / layers) because you have only 228 training samples.

- Add regularization (alpha) and early stopping.

- Use a validation split or cross-validation to tune hyperparameters.

- Increase training examples or use data augmentation for digits.

- Check data & labels for mistakes (wrong labels, different distributions in train/test).

Try simpler models (logistic regression, random forest) as a baseline.

Why this is happening¶

- Your training loss is tiny but test score is low → typical overfitting.

- You have a small dataset (228 samples) and a relatively large network (100 units) — too much capacity.

- Also MLPs are sensitive to input scaling and preprocessing; unscaled pixel ranges can hurt.